April 2, 2025

This post explores the new support in Azure Application Gateway for Containers (AGC) for the overlay network option in Azure Kubernetes Service (AKS), as documented in https://aka.ms/agc/overlay. It also checks whether you can see the traffic between AGC and AKS with VNet Flow Logs. This blog is part of a series:

1. Introduction to AGC architecture and components
2. Troubleshooting
3. Resource model expansion: end-to-end TLS
4. Overlay networking and VNet Flow Logs (this post)

What am I talking about?

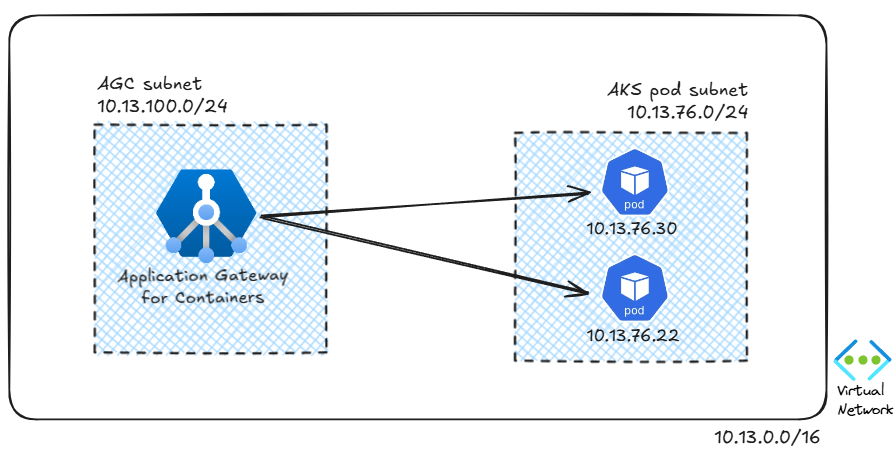

AKS clusters support different network implementations, or Container Network Interface (CNI) plugins. So far, AGC has supported the Azure CNI plugin, in which pods receive IP addresses that are routable inside the Virtual Network. Consequently, AGC can easily send traffic to the pods, both for health checks and for actual application traffic.

However, if you don’t want your pods to consume IP addresses from your VNet range, you can use an alternative network model called overlay. In this approach, the pods get their IP addresses from a range that has nothing to do with the VNet.

It works!

If we look at the AGC metrics, we see that the health checks are OK. This indicates that AGC can successfully reach the Kubernetes pods with its health probes, even though the pods have non-routable IP addresses.

And as you would expect, sending a request to the API works too (the /api/ip endpoint returns some information about the environment). By the way, I have replaced my actual public IP address at home with 1.2.3.4. The output shows that the pod has an IP address from the pod CIDR (10.244.0.147) and that the request comes from AGC’s subnet 10.13.100.0/24, more specifically from 10.13.100.6 (one of the AGC instances under the covers):

```
❯ curl http://$fqdn/api/ip
{
  "host": "gudch2dqgbdwfzak.fz32.alb.azure.com",
  "my_default_gateway": "169.254.23.0",
  "my_dns_servers": "['10.0.0.10']",
  "my_private_ip": "10.244.0.147",
  "my_public_ip": "172.212.55.54",
  "path_accessed": "gudch2dqgbdwfzak.fz32.alb.azure.com/api/ip",
  "sql_server_fqdn": "None",
  "sql_server_ip": "False",
  "x-forwarded-for": "['1.2.3.4']",
  "your_address": "10.13.100.6",
  "your_browser": "None",
  "your_platform": "None"
}
```
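If you want to check those two addresses without eyeballing the JSON, a quick sketch like the following pulls them out of the response. It assumes the one-key-per-line format shown above and deliberately uses `sed` instead of `jq` to avoid extra dependencies; `extract_field` is a hypothetical helper name, and for arbitrary JSON `jq` would be the more robust choice.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print the string value of a top-level JSON key.
# Quick-and-dirty: assumes each "key": "value" pair sits on its own line,
# as in the /api/ip output above.
extract_field() {
  local field="$1"
  sed -n "s/.*\"${field}\": *\"\([^\"]*\)\".*/\1/p"
}

# Sample response trimmed from the curl output above.
response='{
  "host": "gudch2dqgbdwfzak.fz32.alb.azure.com",
  "my_private_ip": "10.244.0.147",
  "your_address": "10.13.100.6"
}'

pod_ip=$(printf '%s\n' "$response" | extract_field my_private_ip)
client_ip=$(printf '%s\n' "$response" | extract_field your_address)
echo "pod IP: ${pod_ip}, request seen from: ${client_ip}"
```

Against the live endpoint, the same helper would be used as `curl -s "http://$fqdn/api/ip" | extract_field your_address`.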

VNet Flow Logs

But how does this work? Spoiler alert: I got a big surprise here, because I thought that the Overlay CNI plugin for AKS would involve some kind of overlay (duh!), but it turns out that it doesn’t!

Back to our lab: you can enable VNet Flow Logs to actually see the traffic going on here. For example, you can query all traffic coming from AGC’s subnet in the last 5 minutes.
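The query is not reproduced as text here, so the following is a hedged sketch of what it might look like. It assumes Traffic Analytics is enabled for the VNet flow logs and writes to the `NTANetAnalytics` table of a Log Analytics workspace, with `SrcIp`, `DestIp` and `DestPort` columns as I understand that schema. `flow_query` and `workspace_id` are hypothetical names, and the `az monitor log-analytics query` call only runs if you set `workspace_id` to your workspace's customer ID (depending on your Azure CLI version, that command may require the `log-analytics` extension).

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: build a KQL query that returns flows whose source IP
# belongs to the given subnet within the given lookback window.
# ASSUMPTION: VNet flow logs land in the Traffic Analytics NTANetAnalytics table.
flow_query() {
  local subnet="$1" lookback="$2"
  cat <<EOF
NTANetAnalytics
| where TimeGenerated > ago(${lookback})
| where ipv4_is_in_range(SrcIp, "${subnet}")
| project TimeGenerated, SrcIp, DestIp, DestPort
| order by TimeGenerated desc
EOF
}

# AGC subnet from this lab, last 5 minutes.
query=$(flow_query "10.13.100.0/24" "5m")
echo "$query"

# Only hit Azure if a workspace ID has been provided.
if [[ -n "${workspace_id:-}" ]]; then
  az monitor log-analytics query --workspace "$workspace_id" \
    --analytics-query "$query" -o table
fi
```

Keep in mind that Traffic Analytics processes flow logs in intervals, so a very short lookback window may come back empty until the next processing run.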

As you can see, traffic is coming from 10.13.100.6 (I had expected to see a second instance there, something like 10.13.100.7; probably a glitch of the preview). The destination IP addresses are the pods’ actual, non-routable IP addresses, as you can confirm by looking at the pods through the Kubernetes API:

```
❯ k get pod -n $app_ns -o wide
NAME                       READY   STATUS    RESTARTS   AGE    IP             NODE                                NOMINATED NODE   READINESS GATES
yadaapi-5f9f964f75-2tnc8   1/1     Running   0          166m   10.244.0.147   aks-nodepool1-30661543-vmss000000   <none>           <none>
yadaapi-5f9f964f75-bg76s   1/1     Running   0          3h1m   10.244.0.131   aks-nodepool1-30661543-vmss000000   <none>           <none>
❯ k get pod -n $tls_app_ns -o wide
NAME                       READY   STATUS    RESTARTS   AGE    IP             NODE                                NOMINATED NODE   READINESS GATES
yadatls-6b655b7bdd-v2frs   2/2     Running   0          102m   10.244.0.203   aks-nodepool1-30661543-vmss000000   <none>           <none>
yadatls-6b655b7bdd-z9vkh   2/2     Running   0          110m   10.244.0.52    aks-nodepool1-30661543-vmss000000   <none>           <none>
```

Just to make sure: these addresses do not belong to the VNet, while the node’s IP address does (I have a single node in this test cluster):

```
❯ k get node -o wide
NAME                                STATUS   ROLES    AGE     VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
aks-nodepool1-30661543-vmss000000   Ready    <none>   3h40m   v1.30.9   10.13.76.4    <none>        Ubuntu 22.04.5 LTS   5.15.0-1081-azure   containerd://1.7.25-1
```

So where do the pod IP addresses come from? They are taken from a range called podCidr, which is a property of the AKS cluster’s network profile and can be defined at creation time:

```
❯ az aks show -n $aks_name -g $rg --query networkProfile.podCidr -o tsv
10.244.0.0/16
```
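To double-check the addresses above programmatically, a small pure-bash CIDR membership test can compare each pod IP and the node IP against the `podCidr` returned by `az aks show`. The VNet prefix `10.13.0.0/16` is an assumption for this lab (the post only shows the node at 10.13.76.4 and the AGC subnet 10.13.100.0/24), and `ip_to_int` and `in_cidr` are hypothetical helper names.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Convert a dotted-quad IPv4 address to a 32-bit integer.
# The 10# prefix forces base 10, so octets like "08" are not read as octal.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Succeed (exit status 0) if IPv4 address $1 falls inside CIDR $2.
in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

pod_cidr="10.244.0.0/16"   # networkProfile.podCidr from az aks show
vnet_cidr="10.13.0.0/16"   # ASSUMPTION: address space of this lab's VNet

# Pod IPs and the node IP from the kubectl output above.
for ip in 10.244.0.147 10.244.0.131 10.244.0.203 10.244.0.52 10.13.76.4; do
  if in_cidr "$ip" "$pod_cidr"; then
    where="podCidr"
  elif in_cidr "$ip" "$vnet_cidr"; then
    where="VNet"
  else
    where="neither"
  fi
  echo "$ip -> $where"
done
```

The four pod addresses land in the pod CIDR and only the node address lands in the (assumed) VNet range, which is the same conclusion as reading the kubectl output by hand.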

And unlike with kubenet, no route table is applied in the AKS VNet.

So how does all this work, then? This is yet another of those things we can attribute to the SDN voodoo under the hood. Contrary to what the name “Overlay” might imply, there is no overlay network at play here, unlike what Flannel or Weave Net (rest in peace) used to do with VXLAN. Instead, Azure SDN transports traffic from AGC to the containers unencapsulated, which is a huge benefit from a visibility perspective: the flow logs record the real pod IP addresses rather than tunneled node-to-node traffic.

Conclusion

It turns out that using AGC with AKS clusters in Overlay mode is sort of boring from a user perspective: nothing changes. One interesting takeaway is that traffic between AGC and AKS can be inspected with VNet Flow Logs, regardless of the CNI plugin the cluster uses.

Did I forget to look at anything here? Let me know in the comments!