
July 22, 2025

As generative AI adoption accelerates, more professionals are recognizing the limitations of basic Retrieval-Augmented Generation (RAG). A common criticism is that traditional RAG provides only superficial analysis, with search results appearing as isolated points rather than forming holistic insights. Most RAG implementations rely on a single search query followed by summarization, which makes it difficult to explore information in depth or to validate findings repeatedly. These limitations become especially clear in complex enterprise scenarios.

Deep Research is designed to address these challenges. In Deep Research, the AI takes a user’s query, collects and analyzes information from a variety of perspectives, and produces a detailed report. This enables deeper insights and multi-dimensional analysis that standard RAG cannot provide. As a result, Deep Research offers better support for decision-making and problem-solving.
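To make the contrast concrete, the following minimal Python sketch compares a single-pass RAG call with an iterative research loop that keeps planning new sub-questions until nothing is left to investigate. The `search` and `plan_subquestions` functions are hypothetical stubs standing in for a retriever and an LLM planner; they are not taken from the sample repository.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Notes:
    """Findings accumulated across rounds, keyed by the sub-question that produced them."""
    findings: dict[str, list[str]] = field(default_factory=dict)


def search(query: str) -> list[str]:
    # Hypothetical retriever stub (web search, Azure AI Search, ...).
    return [f"snippet about {query}"]


def single_pass_rag(question: str) -> list[str]:
    # Basic RAG: one query, one retrieval; the results are then summarized as-is.
    return search(question)


def plan_subquestions(question: str, notes: Notes) -> list[str]:
    # Hypothetical planner stub: an LLM would normally propose new angles based on
    # what is still missing. Here it returns the next angle not yet covered.
    angles = ("background", "architecture", "results")
    pending = [f"{question} ({a})" for a in angles if f"{question} ({a})" not in notes.findings]
    return pending[:1]


def deep_research(question: str, max_rounds: int = 5) -> Notes:
    # Iterative loop: plan, search, record; repeat until the planner has nothing
    # left to ask or the round budget is exhausted.
    notes = Notes()
    for _ in range(max_rounds):
        subquestions = plan_subquestions(question, notes)
        if not subquestions:
            break
        for sq in subquestions:
            notes.findings[sq] = search(sq)
    return notes


print(single_pass_rag("multi-agent case studies"))
print(list(deep_research("multi-agent case studies").findings))
```

The key structural difference is the loop: each round's plan depends on what has already been found, which is what allows deeper, multi-perspective exploration.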

However, the Deep Research features offered through commercial SaaS or PaaS solutions may not fully satisfy certain advanced enterprise requirements, especially in highly specialized fields. This article therefore focuses on building a custom orchestrator in-house and introduces methods for implementing Deep Research capabilities internally.

Sample Code for Hand-Crafted Deep Research

Sample code for this approach is available on GitHub under the name Deep-Research-Agents. Please see the repository for detailed instructions and updates.

Comparing Deep Research Approaches

There are three primary approaches for implementing Deep Research in an enterprise:

| Approach | Overview | Advantages | Disadvantages |
|---|---|---|---|
| 1. SaaS | Use Deep Research through services like M365 Copilot or ChatGPT | Quick to implement and operate | Fine-grained control and integration with existing systems are difficult |
| 2. PaaS | Use Deep Research features through APIs, for example Azure AI Foundry Agent | Integration with in-house systems is possible through APIs | |
| 3. Hand-crafted Orchestrator | Develop and operate a custom orchestrator using open source tools, fully tailored to your requirements | Highly customizable and extensible | Development and maintenance are necessary |

Although building a hand-crafted orchestrator is more technically demanding, this approach provides outstanding extensibility and allows organizations to fully utilize proprietary algorithms and confidential data. For organizations with advanced technical capabilities and the need for specialized research, for example in manufacturing, healthcare, or finance, this method delivers clear benefits and can become a unique engine for competitive advantage.

Designing Hand-Crafted Deep Research

The design philosophy adopted for the internal Deep Research implementation is described below.

Implementation Strategies

There are two main patterns for implementing Deep Research:

| Pattern | Overview | Advantages | Disadvantages |
|---|---|---|---|
| Workflow-based | The workflow is strictly defined in advance, and tasks proceed step by step following this workflow. | Easy to implement; transparent process | Low flexibility; difficult to extend |
| Dynamic routing-based | Only the types of tasks are predefined. The AI dynamically determines which task to execute at runtime, based on the input. | Highly flexible and extensible | Requires expertise; runtime behavior is harder to understand because processing is determined dynamically |

Most open-source implementations use the workflow-based method, but the flexibility and extensibility required for advanced Deep Research make dynamic routing the preferred choice.
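The difference between the two patterns can be sketched in a few lines of Python. The task names and the rule-based `choose_next` router are hypothetical; in a dynamic routing system an LLM would typically make this choice, but the control-flow shape is the same: the workflow runs a fixed sequence, while the router selects from a predefined set of tasks at runtime based on the current state.

```python
from __future__ import annotations

from typing import Callable

# Predefined task types (hypothetical). Each takes the current state and returns a new one.
TASKS: dict[str, Callable[[str], str]] = {
    "search": lambda state: state + " +search",
    "critique": lambda state: state + " +critique",
    "write": lambda state: state + " +write",
}


def run_workflow(state: str) -> str:
    # Workflow-based: the sequence is fixed in advance, whatever the input.
    for name in ("search", "write"):
        state = TASKS[name](state)
    return state


def choose_next(state: str) -> str | None:
    # Dynamic routing: decide the next task at runtime from the current state.
    # This rule-based function stands in for what an LLM router would decide.
    if "+search" not in state:
        return "search"
    if "verify" in state and "+critique" not in state:
        return "critique"
    if "+write" not in state:
        return "write"
    return None  # nothing left to do


def run_dynamic(state: str, max_steps: int = 10) -> str:
    for _ in range(max_steps):
        name = choose_next(state)
        if name is None:
            break
        state = TASKS[name](state)
    return state


print(run_workflow("verify claims"))
print(run_dynamic("verify claims"))
```

For an input that asks for verification, the workflow still runs only `search` and `write`, while the router inserts a `critique` step. Extending the dynamic version means adding a task and a routing rule rather than rewriting a fixed pipeline.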

Architecture

This project uses the dynamic routing approach. The system is structured as a hierarchical multi-agent system in which a manager agent handles task management. Semantic Kernel’s Magentic Orchestration enables the manager to assign work to each specialized agent as needed for effective problem-solving.

Why Use a Multi-Agent System?

A multi-agent system consists of multiple AI agents, each with its own area of expertise, working together and communicating to accomplish tasks. By adopting a structure similar to a human organization, these agents can divide and coordinate their work, making it possible to address more complex challenges than would be feasible for a single agent.

Another advantage of the multi-agent approach is transparency. The interactions and discussions between agents can be easily visualized, which improves the interpretability of results. Furthermore, recent academic research, such as Liu et al. (2025)[1], has reported that using a multi-agent architecture can lead to higher accuracy compared to single-agent approaches. For these reasons, we adopted the multi-agent method in this project.

What is Magentic Orchestration?

In this project, Magentic Orchestration enables dynamic routing between agents, functioning much like a manager who coordinates a team in a human organization.

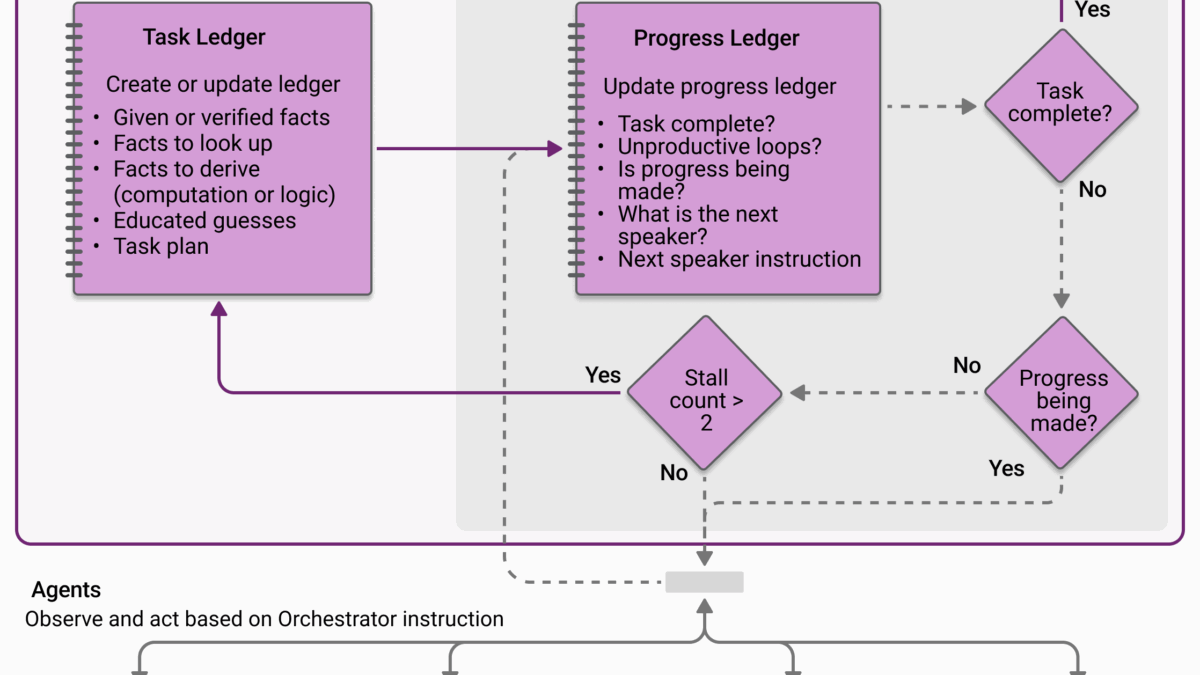

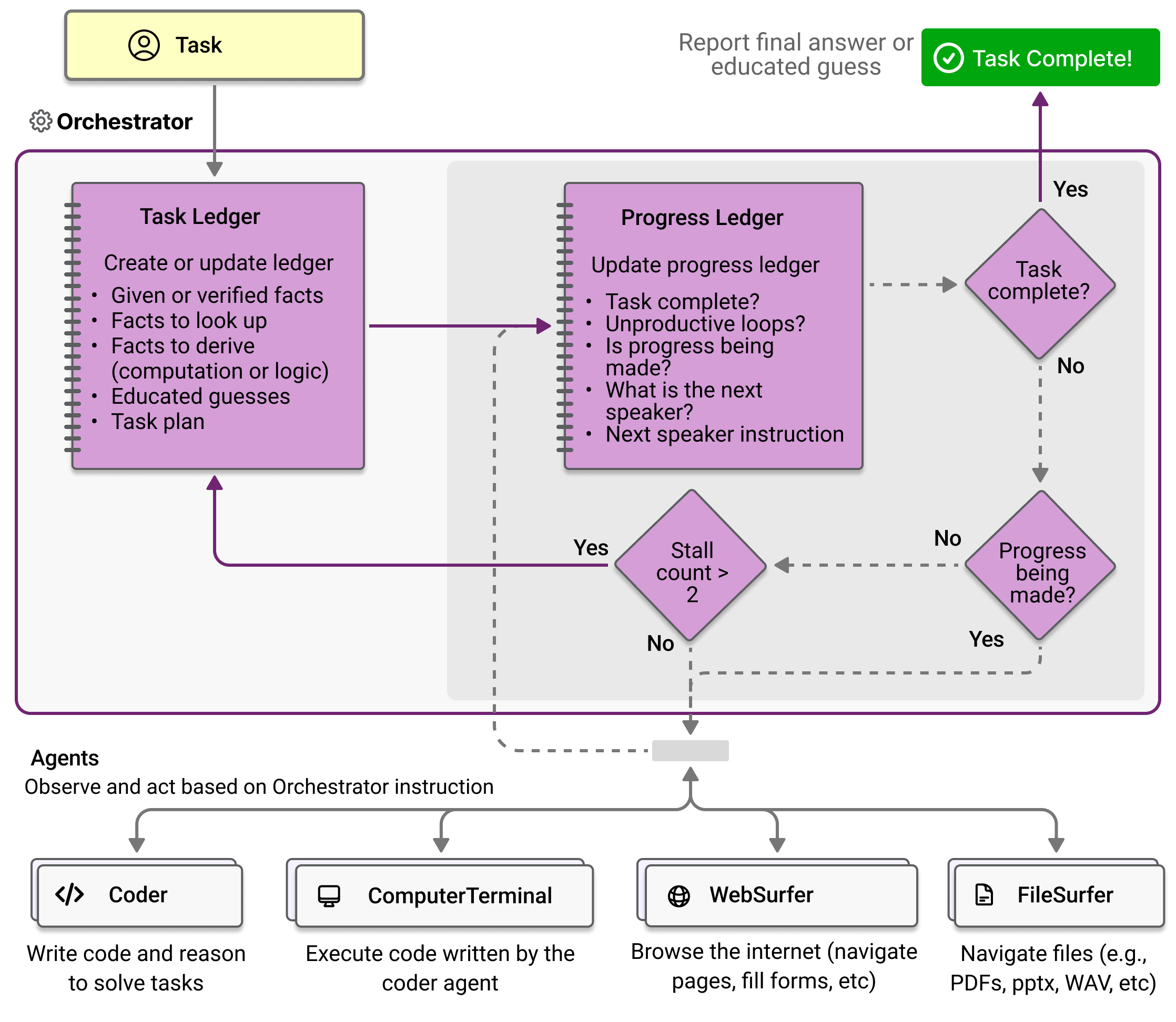

Magentic Orchestration is a mechanism for managing overall plans and task progress in a multi-agent system. It assigns work to each agent as needed, ensuring that tasks are distributed efficiently and progress is tracked across the entire process. The diagrams above illustrate the typical workflow managed by Magentic Orchestration.

For a practical example, please refer to the official Semantic Kernel sample code, which demonstrates Magentic Orchestration in action:

https://github.com/microsoft/semantic-kernel/blob/main/python/samples/getting_started_with_agents/multi_agent_orchestration/step5_magentic.py
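As a rough illustration of the manager loop described above, here is a framework-free Python sketch. It does not use Semantic Kernel’s API; `Manager`, `Ledger`, and the `INCOMPLETE` convention are hypothetical names chosen for illustration. The manager drafts a plan, assigns each step to an agent, tracks progress in a ledger, re-assigns a step when the agent reports it unfinished, and compiles the outputs at the end.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

Agent = Callable[[str], str]


@dataclass
class Ledger:
    plan: list[str]  # ordered steps in the form "agent_name: instruction"
    history: list[tuple[str, str]] = field(default_factory=list)  # (agent_name, output)


class Manager:
    """Toy manager: plans, assigns steps to agents, tracks progress, compiles a result."""

    def __init__(self, agents: dict[str, Agent], max_rounds: int = 10) -> None:
        self.agents = agents
        self.max_rounds = max_rounds

    def make_plan(self, task: str) -> list[str]:
        # Hypothetical fixed plan; a real manager would ask an LLM to draft one.
        return [f"researcher: gather facts on {task}", f"writer: draft report on {task}"]

    def run(self, task: str) -> str:
        ledger = Ledger(plan=self.make_plan(task))
        step = 0
        for _ in range(self.max_rounds):  # hard cap so a stuck agent cannot loop forever
            if step >= len(ledger.plan):
                break  # every planned step is complete
            name, instruction = ledger.plan[step].split(": ", 1)
            output = self.agents[name](instruction)
            ledger.history.append((name, output))
            # Progress check: advance only if the agent did not report the step as
            # unfinished; otherwise the same step is re-assigned in the next round.
            if not output.startswith("INCOMPLETE"):
                step += 1
        # The manager compiles the final result from every agent turn.
        return "\n".join(f"[{name}] {output}" for name, output in ledger.history)


replies = iter(["INCOMPLETE: need more sources", "facts collected"])
manager = Manager({
    "researcher": lambda instruction: next(replies),
    "writer": lambda instruction: f"report ({instruction})",
})
print(manager.run("Azure multi-agent case studies"))
```

The `max_rounds` cap matters in practice: without it, an agent that never reports success would keep the loop running indefinitely.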

Agent Structure

This system employs Semantic Kernel’s Magentic Orchestration to coordinate a multi-agent team, with each agent assigned a specialized role inspired by a human research organization. The structure ensures clear role separation and promotes diversity in information gathering for comprehensive and reliable research outputs.

Information retrieval is directed by the Lead Researcher, who manages three Sub-Researchers. To broaden and balance the perspectives collected, each Sub-Researcher uses a different temperature setting, which increases the diversity of their responses.
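A minimal sketch of this fan-out, assuming an async chat-completion call: the Lead Researcher sends the same task to three Sub-Researchers concurrently, each configured with its own temperature. The temperature values and the `call_llm` stub are hypothetical placeholders; the article states only that the settings differ.

```python
from __future__ import annotations

import asyncio
from dataclasses import dataclass


@dataclass(frozen=True)
class SubResearcher:
    name: str
    temperature: float


# Hypothetical values: the article only states that each Sub-Researcher uses a different temperature.
TEAM = (
    SubResearcher("sub_researcher_1", 0.2),
    SubResearcher("sub_researcher_2", 0.6),
    SubResearcher("sub_researcher_3", 1.0),
)


async def call_llm(prompt: str, temperature: float) -> str:
    # Hypothetical stand-in for an async chat-completion call at the given temperature.
    await asyncio.sleep(0)
    return f"T={temperature}: findings for {prompt}"


async def lead_researcher(task: str, team: tuple[SubResearcher, ...] = TEAM, max_parallel: int = 3) -> list[str]:
    # Limits how many Sub-Researchers call the model at the same time.
    semaphore = asyncio.Semaphore(max_parallel)

    async def investigate(member: SubResearcher) -> str:
        async with semaphore:
            return await call_llm(task, member.temperature)

    # Same task, different temperatures; gather() returns results in team order.
    return list(await asyncio.gather(*(investigate(m) for m in team)))


if __name__ == "__main__":
    for finding in asyncio.run(lead_researcher("Azure multi-agent case studies in Japan")):
        print(finding)
```

The `max_parallel` limit (or the size of `team`) is one place to control how many Sub-Researchers run at once, which directly affects token usage and cost.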

- Magentic Orchestration: Oversees overall planning, manages task progress, assigns work to agents, and compiles the final report

- Lead Researcher: Develops the research plan, delegates specific research tasks to Sub-Researchers, and synthesizes their findings into a summary

- Sub-Researchers 1–3: Conduct searches and investigations, each with a different temperature setting to generate diverse results

- Credibility Critic: Evaluates the reliability and coverage of information sources, providing quality feedback

- Report Writer: Drafts the initial report based on all collected information

- Reflection Critic: Reviews the draft report and offers feedback to improve its quality

- Translator: Translates content as needed

- Citation Agent: Records and manages references, ensuring proper citations in the final report


This hierarchical agent structure allows the system to efficiently coordinate specialized tasks, producing reports that are thorough, trustworthy, and easy to review.
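To illustrate the kind of bookkeeping the Citation Agent performs, here is a hypothetical Python sketch (not the sample’s implementation) that assigns each unique source URL a stable number in order of first use and produces a matching reference list.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CitationRegistry:
    """Hypothetical helper: gives each unique source URL a stable number in order of first use."""
    _numbers: dict[str, int] = field(default_factory=dict, init=False)
    _titles: dict[str, str] = field(default_factory=dict, init=False)

    def cite(self, url: str, title: str) -> str:
        # A URL cited again keeps its original number (and its original title).
        if url not in self._numbers:
            self._numbers[url] = len(self._numbers) + 1
            self._titles[url] = title
        return f"[{self._numbers[url]}]"

    def reference_list(self) -> list[str]:
        # Dicts preserve insertion order, so entries come out in citation order.
        return [f"{n}. {self._titles[url]}, {url}" for url, n in self._numbers.items()]


registry = CitationRegistry()
nec = "https://jpn.nec.com/digital-wp/workdx2025/document/pdf/workdx2025_session4.pdf"
fujitsu = "https://pr.fujitsu.com/jp/news/2024/12/12.html"
print(f"NEC agents collaborate autonomously {registry.cite(nec, 'Work DX 2025 Session 4, NEC')}.")
print(f"Fujitsu applies agents to security {registry.cite(fujitsu, 'Multi-AI Agent Security Technology, Fujitsu')}.")
print(f"NEC plans further orchestration layers {registry.cite(nec, 'Work DX 2025 Session 4, NEC')}.")
print("\n".join(registry.reference_list()))
```

Keeping numbering in one place means the Report Writer and Reflection Critic can revise text freely while reference numbers stay consistent.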

Overall Architecture

The overall architecture supports both external web searches and internal document searches. For internal content, Azure AI Search is used to index and search enterprise documents, enabling secure and scalable access to proprietary knowledge. If you need to handle structured data, such as tables, you can store it in Azure Table Storage and make it searchable with the Azure Table Storage indexer in Azure AI Search. This approach lets the system adapt flexibly to various data formats and makes internal research much more efficient.
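For the internal-document path, a search tool exposed to the agents can be a thin wrapper around the Azure AI Search Python SDK (`azure-search-documents`). The sketch below assumes an existing index with `title` and `content` fields and uses hypothetical environment variable names; the client is injected so the wrapper can be exercised with a stand-in object, and the SDK is imported only inside `make_search_client`.

```python
from __future__ import annotations

import os
from typing import Any, Iterable, Protocol


class Searcher(Protocol):
    def search(self, search_text: str, **kwargs: Any) -> Iterable[dict]: ...


def search_internal_docs(client: Searcher, query: str, top: int = 5) -> list[dict]:
    """Return trimmed hits so that only the fields the agents need reach the LLM."""
    hits = client.search(search_text=query, top=top)
    # "title" and "content" are assumed index field names; adjust to your schema.
    return [{"title": h.get("title", ""), "content": h.get("content", "")} for h in hits]


def make_search_client():
    # Imported lazily so the wrapper can be tested without the Azure SDK installed.
    from azure.core.credentials import AzureKeyCredential
    from azure.search.documents import SearchClient

    return SearchClient(
        endpoint=os.environ["AZURE_SEARCH_ENDPOINT"],  # hypothetical variable names
        index_name=os.environ["AZURE_SEARCH_INDEX"],
        credential=AzureKeyCredential(os.environ["AZURE_SEARCH_API_KEY"]),
    )
```

In use, an agent tool would call `search_internal_docs(make_search_client(), "quality inspection procedure")`. Trimming each hit to the fields the agents actually need keeps search results compact before they reach the model.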

The sample code uses Tavily for external web search integration. When leveraging web search APIs or other third-party services, please review the relevant product terms and manage any risks related to information leakage or external query exposure according to your organization’s policies.
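One way to act on this advice is to check every outbound query before it reaches an external search API. The following Python sketch assumes a hypothetical list of confidential patterns (the codename “Project Falcon” is invented for illustration) and blocks matching queries. The Tavily client is created with `TavilyClient(api_key=...)` from the `tavily-python` package, whose `search` method returns a dictionary with a `results` list; pattern matching like this is only a coarse first check.

```python
from __future__ import annotations

import os
import re
from typing import Any, Protocol


class WebSearch(Protocol):
    def search(self, query: str, **kwargs: Any) -> dict: ...


# Hypothetical examples of terms that must never leave the organization.
CONFIDENTIAL_PATTERNS = [
    re.compile(p, re.IGNORECASE) for p in (r"project\s+falcon", r"\bconfidential\b")
]


def is_safe_external_query(query: str) -> bool:
    return not any(p.search(query) for p in CONFIDENTIAL_PATTERNS)


def guarded_web_search(client: WebSearch, query: str, max_results: int = 5) -> list[dict]:
    # Refuse rather than silently rewrite, so the caller decides what to do next.
    if not is_safe_external_query(query):
        raise ValueError("query blocked by external-search policy")
    response = client.search(query, max_results=max_results)
    return [{"title": r.get("title"), "url": r.get("url")} for r in response.get("results", [])]


def make_tavily_client():
    # Lazy import; requires the tavily-python package and a TAVILY_API_KEY variable (assumed name).
    from tavily import TavilyClient

    return TavilyClient(api_key=os.environ["TAVILY_API_KEY"])
```

Raising an error instead of rewriting the query keeps the decision visible, for example to a manager agent or a human reviewer.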

Sample Output

Below is an example of output generated by running the sample code. The total execution time is approximately seven minutes, with an LLM cost of $1.30. For further details, please refer to Appendix: Output Example Execution Information.

Execution Example

Input Query: Please summarize official case studies from five companies in Japan that utilize multi-agent systems powered by Azure AI/OpenAI. Provide a detailed report in Japanese, using only information from the companies’ official websites.

Output:

Executive Summary

This research systematically reviews the latest trends, system architectures, and results of five Japanese companies (NEC, Panasonic Connect, Fujitsu, KDDI, and Panasonic Holdings) that have published official case studies involving the use of Azure AI/OpenAI Service with multi-agent collaboration. The main findings are as follows:

- All five companies operate multiple task-specific agents under secure, enterprise-grade Azure OpenAI Service environments.

- Typical roles include search agents, specialized domain agents, and workflow/orchestration agents, with hybrid architectures combining RAG and SaaS integration being the most common.

- Measured benefits include a 20–40% reduction in search and summarization time, a 15% improvement in attack detection rates, and up to 30% reduction in manual workload.

- Future plans include scaling out to overseas locations and implementing advanced orchestration features such as meta-cognitive coordination.

Note: For this report, Panasonic Connect and Panasonic Holdings are treated as separate entities since they are independent legal companies and publish separate official data. Thus, five distinct companies are included.

Detailed Findings

1. NEC
   - Background: The need to handle varying response granularities across departments and tasks highlighted the importance of coordinating multiple agents.
   - Architecture: The “NEC AI Agent for NGS” consists of Search, Office, Task, and Individual Agents, autonomously collaborating through a hybrid setup that combines Azure OpenAI Service and internal data.
   - Results: Substantial reduction in document search time and expansion of user departments.
   - Outlook: Plans to add orchestration layers and extend use to decision-making and development support.

2. Panasonic Connect
   - Background: Needed a generative AI foundation usable across manufacturing, logistics, and retail domains.
   - Architecture: Combined LangChain, LangGraph, Chainlit, and Azure OpenAI to deploy three agent types (Navigator, Workflow, and General-purpose) simultaneously.
   - Results: Achieved up to 30% reduction in workload for production, logistics, and sales tasks.
   - Outlook: Plans to scale to over 100 projects within FY2025 and extend to code generation and automated design.

3. Fujitsu
   - Background: Needed to automate vulnerability detection and response due to increasing complexity of cyber attacks.
   - Architecture: The “Multi-AI Agent Security Technology” uses specialized agents for attack analysis, defense planning, and recovery, coordinated with Azure OpenAI Service.
   - Results: 15% increase in unknown attack detection rates; reduced time to propose countermeasures by over half.
   - Outlook: Aims to deploy globally in SOCs by 2026 and achieve autonomous defense.

4. KDDI
   - Background: Developed an enterprise AI platform integrating multiple AI services for business clients.
   - Architecture: Integrates four types of AI agents through a multi-cloud gateway with existing systems.
   - Results: Reduced workload by 40% across call center summarization, EC product description generation, and manual creation tasks.
   - Outlook: Plans to coordinate agents for network failure analysis and traffic optimization.

5. Panasonic Holdings
   - Background: Established a common AI foundation to safely use generative AI across business units and improve efficiency.
   - Architecture: “PX-GPT” deploys department-specific agents on the intranet, with Azure OpenAI Service at the core, serving as a multi-agent foundation across departments.
   - Results: 28,000 users within four months of release; average 20% reduction in search and summarization time.
   - Outlook: Plans to expand to overseas offices and integrate with knowledge-sharing platforms by FY2025.

Conclusions and Recommendations

Japanese enterprises are leveraging the security and scalability of Azure OpenAI Service to connect multiple role-specific AI agents, significantly improving business efficiency and quality. Key points include:

- Most organizations use RAG-based search agents as the starting point for information retrieval, followed by deeper analysis by specialized agents.

- Performance indicators such as time reduction and detection rates are quantitatively demonstrated, confirming real-world benefits.

- Future directions include enhancing orchestration capabilities and expanding use cases to global locations.

Recommended actions for enterprises:

- Automate orchestration: Enhance dynamic task allocation and agent meta-cognition to handle complex workflows.

- Strengthen governance: Develop clear guidelines for generative AI usage and maintain audit logs to minimize risks of sensitive data leaks or hallucinations.

- Accelerate PoC-to-production: Redesign business processes to be agent-friendly, and use metrics and ROI from small-scale pilots to enable fast transition to full production use.

Source Documentation

| # | Type | Title | URL or Document ID | Date/Version | Key Excerpt |
|---|---|---|---|---|---|
| 1 | Web | Work DX 2025 Session 4 (NEC) | https://jpn.nec.com/digital-wp/workdx2025/document/pdf/workdx2025_session4.pdf | 2025-01 | “Search Agent, Office Agent, Task Agent, and Individual Agent autonomously collaborate.” |
| 2 | Web | Large Language Models Technical Introduction (Panasonic Connect) | https://group.connect.panasonic.com/psnrd/technology/large-language-models.html | No date – Accessed 2025-07-14 | “Flexible AI agent customization with LangChain, LangGraph, Chainlit, and Azure OpenAI.” |
| 3 | Web | Panasonic Connect Accelerates LLM Agent Utilization | https://news.panasonic.com/jp/press/jn250707-2 | 2025-07-07 | “Multiple navigator, workflow, and general-purpose AI agents deployed.” |
| 4 | Web | World’s First Multi-AI Agent Security Technology Developed (Fujitsu) | https://pr.fujitsu.com/jp/news/2024/12/12.html | 2024-12-12 | “Multi-AI Agent Security Technology.” |
| 5 | Web | Azure OpenAI Service for Enterprise (KDDI) | https://biz.kddi.com/service/ms-azure/open-ai/ | No date – Accessed 2025-07-14 | “Combining multiple AI agents…” |
| 6 | Web | KDDI Accelerates Enterprise DX with Multi-Cloud x Generative AI | https://newsroom.kddi.com/news/detail/kddi_pr-958.html | 2023-09-05 | “By combining four models…” |
| 7 | Web | Launch of In-house Generative AI Platform ‘PX-GPT’ (Panasonic) | https://news.panasonic.com/jp/press/jn230414-1 | 2023-04-14 | “Multi-agent platform available across departments.” |

References

- Work DX 2025 Session 4, NEC, https://jpn.nec.com/digital-wp/workdx2025/document/pdf/workdx2025_session4.pdf

- Large Language Models Technical Introduction, Panasonic Connect, https://group.connect.panasonic.com/psnrd/technology/large-language-models.html

- Panasonic Connect Accelerates LLM Agent Utilization, Panasonic Newsroom, https://news.panasonic.com/jp/press/jn250707-2

- World’s First Multi-AI Agent Security Technology Developed, Fujitsu, https://pr.fujitsu.com/jp/news/2024/12/12.html

- Azure OpenAI Service for Enterprise, KDDI, https://biz.kddi.com/service/ms-azure/open-ai/

- KDDI Accelerates Enterprise DX with Multi-Cloud x Generative AI, KDDI Newsroom, https://newsroom.kddi.com/news/detail/kddi_pr-958.html

- Launch of In-house Generative AI Platform ‘PX-GPT’, Panasonic Newsroom, https://news.panasonic.com/jp/press/jn230414-1

Conclusion

In summary, this article has demonstrated the following key points regarding the in-house implementation of Deep Research:

- Flexible deep dives and multi-perspective analysis: Hand-crafted Deep Research enables organizations to conduct detailed and iterative analysis, allowing solutions to be tailored to unique business requirements. This level of flexibility is difficult to achieve with RAG approaches.

- Creation of competitive advantage: While custom implementation requires initial investment and development effort, it allows advanced organizations to build Deep Research capabilities that are fully aligned with their own needs, providing a significant source of competitive differentiation.

- Specialization, extensibility, and transparency through multi-agent systems and dynamic routing: By combining multi-agent systems with dynamic routing, organizations can achieve high accuracy and adaptability. This architecture also makes it easy to add new features or data sources in the future.

- Fast and smooth adoption with open source sample code: By starting with the publicly available code on GitHub, organizations can quickly set up their own environments and begin evaluation with minimal risk.

I encourage you to leverage these approaches and try implementing Deep Research within your own organization.

Appendix: Output Example Execution Information

- Execution time: About 7 minutes
- Total LLM cost: $1.30
  - o3: $0.34
  - GPT-4.1: $0.96
- Token usage:
  - o3:
    - Input tokens (without cache): 93.2K
    - Input tokens (cached): 46.4K
    - Output tokens: 16.0K
  - GPT-4.1:
    - Input tokens (without cache): 358.1K
    - Input tokens (cached): 302.7K
    - Output tokens: 12.1K


GPT-4.1 accounts for most of the processing cost and token usage because it handles the search results. To reduce costs, it is effective to lower the number of Sub-Researchers running in parallel.
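The reported costs can be reproduced from the token counts with simple arithmetic. The per-million-token prices below are an assumption (USD 2.00 for input, 0.50 for cached input, and 8.00 for output, for both models); with these values the calculation matches the $0.34 and $0.96 reported for this run, but check current pricing before reusing them.

```python
# Assumed list prices in USD per 1M tokens: (input, cached input, output).
# These are assumptions; check current Azure OpenAI pricing before reuse.
PRICES = {
    "o3": (2.00, 0.50, 8.00),
    "GPT-4.1": (2.00, 0.50, 8.00),
}

# Token counts from the execution information above: (uncached input, cached input, output).
USAGE = {
    "o3": (93_200, 46_400, 16_000),
    "GPT-4.1": (358_100, 302_700, 12_100),
}


def model_cost(model: str) -> float:
    price_in, price_cached, price_out = PRICES[model]
    uncached, cached, output = USAGE[model]
    return (uncached * price_in + cached * price_cached + output * price_out) / 1_000_000


for m in USAGE:
    print(f"{m}: ${model_cost(m):.2f}")  # o3: $0.34, then GPT-4.1: $0.96
print(f"total: ${sum(model_cost(m) for m in USAGE):.2f}")  # total: $1.30
```

The breakdown also supports the point about search results: uncached input alone accounts for roughly $0.72 of GPT-4.1’s $0.96.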