Why Do Limits Exist?

Azure AI services allow us to build intelligent applications with prebuilt and customisable models. These services cover a wide range of applications, including natural language processing, translation, speech recognition, computer vision, and decision-making.

While these tools are powerful, they come with specific limits designed to ensure performance, reliability, and fair usage across the platform.

Azure’s documentation, though comprehensive, can sometimes feel overwhelming. This post aims to provide a clear and practical overview of how to navigate and overcome the limits of Azure AI services. While we’ll focus on Azure AI Language Services, many of these principles apply across all Azure AI offerings, with slight variations.

Understanding Azure AI Service Limits

Feature-Specific Limits

Each Azure AI service has unique limits tied to its features. Common examples include:

- Maximum characters per document

- Maximum request size

- Documents per request (see bulk requests below)

These limits vary by service and are detailed in Microsoft’s documentation. For instance, you can review the specific limits for AI Language Services here.
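A pre-flight check can catch limit violations before a request leaves your application. The sketch below is illustrative only: `FeatureLimits` and `check_request` are hypothetical names, and the limit values must be filled in from the documentation for the feature you use.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureLimits:
    """Hypothetical container; fill in the documented values for your feature."""
    max_chars_per_document: int
    max_documents_per_request: int
    max_request_bytes: int


def check_request(documents: list[dict], limits: FeatureLimits) -> list[str]:
    """Return human-readable limit violations (an empty list means none found)."""
    problems = []
    if len(documents) > limits.max_documents_per_request:
        problems.append(
            f"{len(documents)} documents exceeds the per-request cap of "
            f"{limits.max_documents_per_request}"
        )
    for doc in documents:
        # len() counts Unicode code points, which only approximates how the
        # service counts characters (an assumption of this sketch).
        if len(doc["text"]) > limits.max_chars_per_document:
            problems.append(f"document {doc['id']} is too long")
    # Approximate the payload size by serialising the documents alone.
    body = json.dumps({"documents": documents}).encode("utf-8")
    if len(body) > limits.max_request_bytes:
        problems.append(f"request body of {len(body)} bytes is too large")
    return problems
```

Returning a list of problems rather than raising on the first one makes it easier to log every violation in a batch at once.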

Pricing Tier Limits

Azure AI services impose rate limits based on the pricing tier you select. Each tier specifies:

- Maximum transactions per second (TPS)

- Maximum transactions per minute (TPM)

The free (F0) tier offers a limited number of requests per month and allows fewer requests per second than the paid (S) tiers. For production applications, upgrading to a paid tier is often required to meet performance needs.
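One way to stay under a tier's rate limit is to throttle on the client side. The following is a minimal sliding-window sketch, not an official SDK feature: `SlidingWindowLimiter` is a hypothetical helper, and the limit you pass in should come from your tier's documented values.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most max_calls within any period_seconds window."""

    def __init__(self, max_calls, period_seconds,
                 clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.period = period_seconds
        self._clock = clock      # injectable so the limiter can be tested
        self._sleep = sleep
        self._calls = deque()    # timestamps of recent calls, oldest first

    def acquire(self):
        """Block until one more call fits inside the window, then record it."""
        while True:
            now = self._clock()
            # Drop timestamps that have left the window.
            while self._calls and now - self._calls[0] >= self.period:
                self._calls.popleft()
            if len(self._calls) < self.max_calls:
                self._calls.append(now)
                return
            # Wait until the oldest recorded call leaves the window.
            self._sleep(self.period - (now - self._calls[0]))
```

Call `limiter.acquire()` immediately before each API request. This sketch is single-threaded; a multi-threaded client would need a lock around `acquire`.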

Bulk Requests: A Performance Boost

Certain features allow bulk requests, where multiple inputs can be processed in a single API call. This approach significantly increases throughput by reducing the number of calls made to the API.

However, there are some caveats:

- Synchronous processing has stricter limits, with capped document counts per request.

- Asynchronous processing offers far higher limits. For example:

  - Language detection (async): up to 1,000 documents per request.

  - Language detection (sync): capped at 25 documents per request.
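Whatever the cap is for your feature and processing mode, a large input set has to be split into batches that respect it. The helper below is a small sketch of that step; `batch_documents` is a hypothetical name, and the cap is passed in rather than hard-coded.

```python
from typing import Iterator


def batch_documents(texts: list[str], max_per_request: int) -> Iterator[list[dict]]:
    """Yield lists of {"id", "text"} documents, each within the per-request cap."""
    if max_per_request < 1:
        raise ValueError("max_per_request must be at least 1")
    for start in range(0, len(texts), max_per_request):
        chunk = texts[start:start + max_per_request]
        # Ids continue across batches (1, 2, 3, ...) so that results can be
        # mapped back to the position of the original input.
        yield [
            {"id": str(index), "text": text}
            for index, text in enumerate(chunk, start=start + 1)
        ]
```

Each yielded list can be placed in the `documents` array of one request body.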

Sample REST Call for Asynchronous Processing

Here’s an example REST call for bulk asynchronous processing in Azure AI Language Service:

POST https:///language/:analyze-text?api-version=2022-05-01

{
  "analysisInput": {
    "documents": [
      { "id": "1", "text": "Azure AI is great for language processing." },
      { "id": "2", "text": "Another example of bulk text input." }
    ]
  },
  "tasks": [
    {
      "kind": "LanguageDetectionTask",
      "parameters": {
        "loggingOptOut": false
      }
    }
  ]
}

Overcoming Limits with Self-Hosted Options

For users needing even greater flexibility, Microsoft offers Azure AI services in containers. This self-hosted approach provides significant advantages, including:

- Higher throughput by bypassing Azure’s PaaS (Platform-as-a-Service) rate limits.

- Local installations for enhanced performance in environments with limited internet bandwidth or strict data residency requirements.

By hosting Azure AI services on your own infrastructure, you control throughput directly: it is bounded by the compute you provision rather than by the cloud service's rate limits.

Global and Data Zone Considerations

Azure AI Language Service applies rate limits per resource and per feature, regardless of deployment region. For example:

- In the Standard tier, each feature (e.g., Sentiment Analysis or Key Phrase Extraction) has a rate limit of 1,000 requests per minute per resource.

- These limits are independent of other resources, even if they are deployed in the same or different regions.

This means that spreading the workload across multiple resources can help achieve higher throughput than a single resource's rate limit allows.

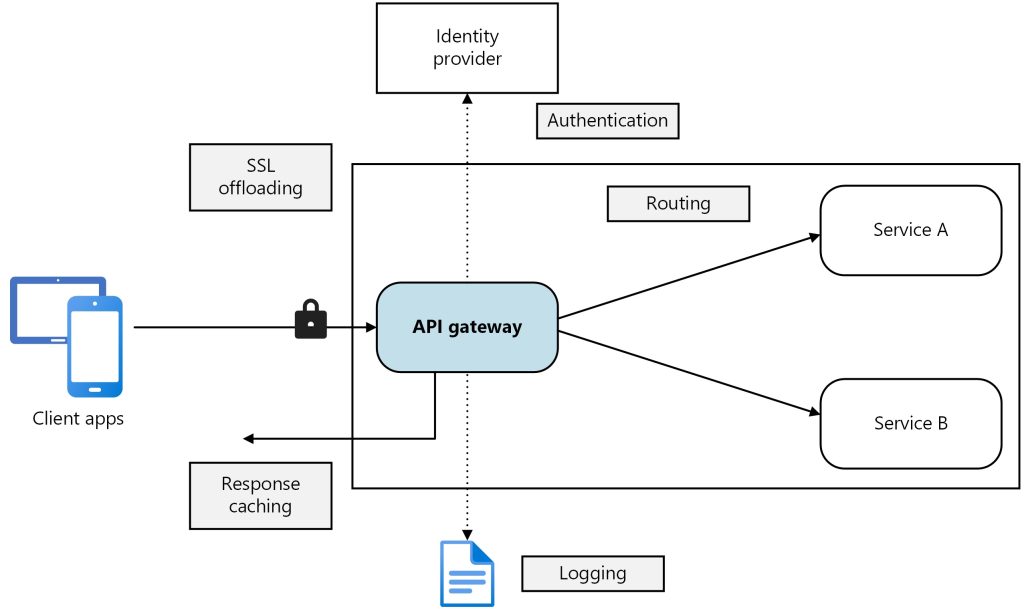

For load balancing and centralised logging, consider using Azure API Management to distribute traffic across multiple instances.
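If a gateway is not an option, the same idea of spreading traffic over several resources can be approximated in the client. This is a minimal round-robin sketch; `ResourcePool` is a hypothetical helper, and the endpoint strings in the usage note are placeholders rather than real resource URLs.

```python
import itertools
import threading


class ResourcePool:
    """Hand out resource endpoints in round-robin order."""

    def __init__(self, endpoints: list[str]):
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        self._cycle = itertools.cycle(endpoints)
        self._lock = threading.Lock()  # keeps the rotation safe across threads

    def next_endpoint(self) -> str:
        """Return the endpoint that should receive the next request."""
        with self._lock:
            return next(self._cycle)
```

For example, `ResourcePool(["resource-a", "resource-b"])` returns `resource-a`, `resource-b`, `resource-a`, and so on. Each resource needs its own key, so a real client would rotate endpoint and credential pairs together.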

Monitoring and Diagnostics

The Azure AI Services API includes a “csp-billing-usage” header in its responses, providing details on resource consumption for each API call. For example:

csp-billing-usage: CognitiveServices.TextAnalytics.Language.Text.LanguageDetection=2,CognitiveServices.TextAnalytics.TextRecords=2

This header indicates that the LanguageDetection operation consumed two units, as did the processing of TextRecords.
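To track consumption per call, the header value can be split into meter names and counts. The parser below is a sketch that assumes the comma-separated `name=count` format shown in the example above, with integer counts; `parse_billing_usage` is a hypothetical helper name.

```python
def parse_billing_usage(header_value: str) -> dict[str, int]:
    """Split a csp-billing-usage header value into {meter name: units}."""
    usage = {}
    for part in header_value.split(","):
        part = part.strip()
        meter, separator, count = part.rpartition("=")
        if not separator:
            # Skip empty or malformed segments instead of failing the call.
            continue
        usage[meter] = int(count)
    return usage
```

Summing these values across responses gives a running picture of consumption without waiting for the billing portal to update.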

To gain deeper insight into usage and performance, you can enable Azure Diagnostics. The AzureDiagnostics and Usage tables in Log Analytics provide data for monitoring and analysing consumption.

Exploring Alternatives

While Azure AI services offer robust functionality, you may also explore alternative solutions like open-source libraries or third-party tools. However, these come with their own limitations.

For more on this topic, check out my previous posts:

- Azure AI Language Services vs. Open-Source NuGet Solutions

- Cloud vs. Open Source: Language Detection Tools Tested in Microservice Architectures

- Azure AI Service in Containers: Ultimate Flexibility Anywhere

Final Thoughts

Azure AI services are incredibly versatile, but their built-in limits require thoughtful solution design to avoid bottlenecks. Understanding and addressing these constraints will help you maximise the potential of your AI solutions.

The post Overcome Azure AI Services Limits: A Practical Guide first appeared on AzureTechInsider.