Bend the Curve on Innovation


May 16, 2025

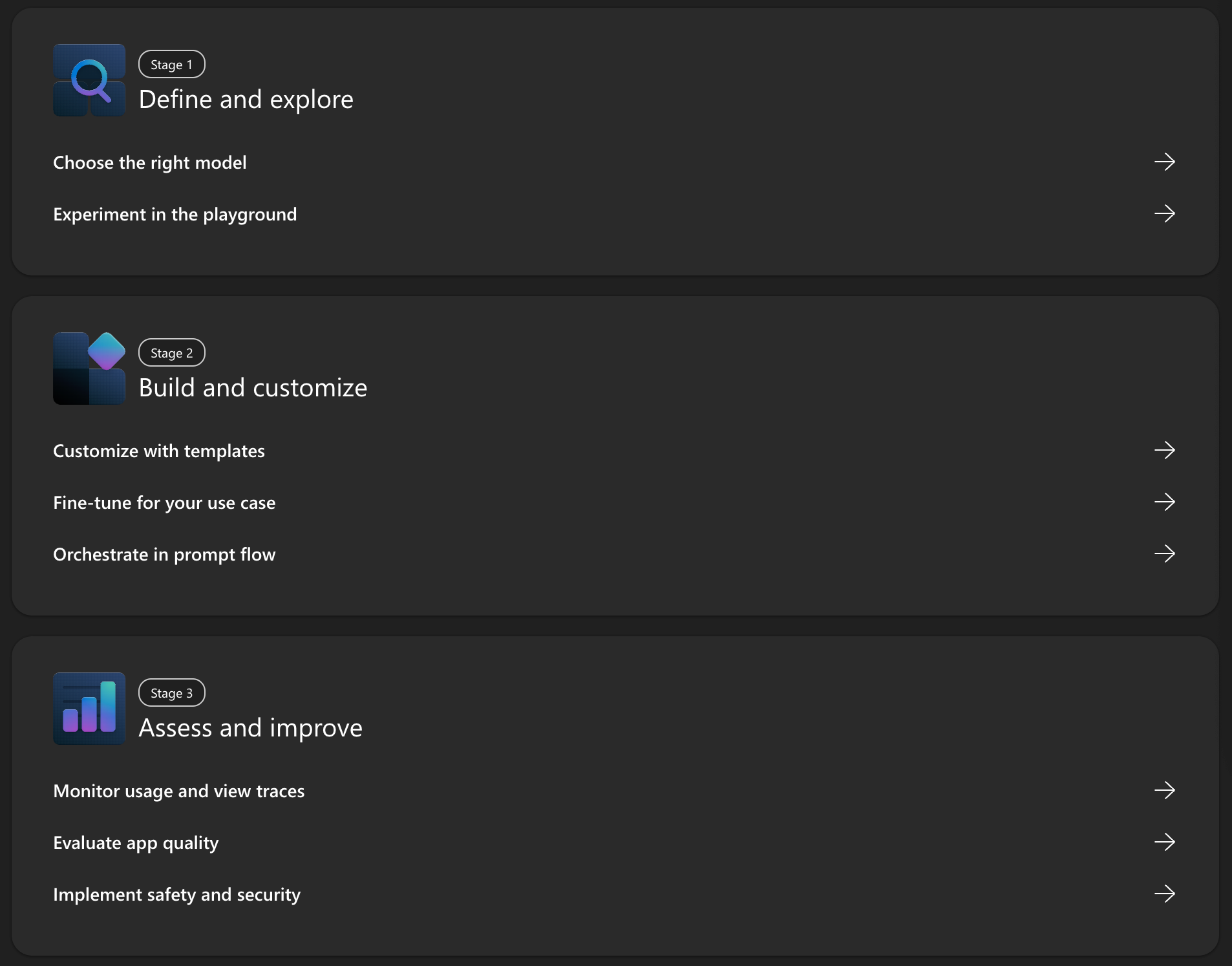

Note how each of these stages serves a purpose in the journey from idea to production!

In this post, I'm going to explore the use-cases for Azure AI Foundry by walking through each stage in turn.

Once you know why you'd make use of each stage, you'll start to see the real power of AI Foundry as a wider platform.

Define and Explore

Before you build anything, you need to choose the right model, and that's where Azure AI Foundry really sets itself apart, thanks to two key capabilities: the Model Catalogue and the Playground.

Model Catalogue

At the time of writing, the catalogue includes 1,987 models from OpenAI, Meta, Mistral, DeepSeek, and others, all accessible in one place through a unified interface.

The real benefit is that you don't need to integrate with third parties or set up separate accounts and environments to use non-Microsoft models. You can also search, filter, and rank options against metrics such as quality, cost, and latency, helping you find the right fit without guesswork or hours of R&D.

Why it matters:

- Access top models across providers without leaving Azure
- Use leaderboards to evaluate quality, cost-efficiency, and throughput
- Avoid vendor lock-in with consistent interfaces for every model

Example:

You're building a chatbot for customer support. You need something that can:

- Respond conversationally and accurately
- Return answers quickly (throughput)
- Stay within budget

Using the catalogue, you can shortlist models, compare them side-by-side, and pick one based on actual performance data, not just a brand name.
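To make the shortlisting idea concrete, here is a minimal sketch in plain Python. It does not call the Foundry catalogue; the model names and figures are made up for illustration, and `ModelCandidate` and `shortlist` are hypothetical helpers. It simply filters candidates by budget and latency, then ranks what is left by quality.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelCandidate:
    name: str           # placeholder name, not a real catalogue entry
    quality: float      # 0..1, higher is better (made-up figure)
    cost_per_1k: float  # cost per 1,000 tokens (made-up figure)
    latency_ms: float   # typical response latency (made-up figure)


def shortlist(candidates, max_cost, max_latency_ms):
    """Keep models within the cost and latency limits, best quality first."""
    eligible = [
        c for c in candidates
        if c.cost_per_1k <= max_cost and c.latency_ms <= max_latency_ms
    ]
    return sorted(eligible, key=lambda c: c.quality, reverse=True)


CANDIDATES = [
    ModelCandidate("model-a", quality=0.90, cost_per_1k=0.030, latency_ms=900),
    ModelCandidate("model-b", quality=0.84, cost_per_1k=0.004, latency_ms=350),
    ModelCandidate("model-c", quality=0.78, cost_per_1k=0.001, latency_ms=200),
]

ranked = shortlist(CANDIDATES, max_cost=0.010, max_latency_ms=500)
print([c.name for c in ranked])  # ['model-b', 'model-c']
```

Note that the highest-quality candidate drops out here because it breaks both limits, which is exactly the kind of trade-off the leaderboards are meant to surface.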

Playground

Think of the Playground as your safe space to get hands-on, whether you’re working with text, speech, vision, or something more specialised.

And it's not just for chatbots. There are dedicated playgrounds for:

- Agents: build AI agents, grounded in your enterprise data, that can act independently
- Audio: test native speech-to-speech and voice-based experiences
- Images: generate visuals from text prompts using models like DALL-E
- Speech, Language & Translation: summarise text, answer questions, or translate between languages

Once you've picked a model, you can start interacting with it: trying prompts, tuning system instructions, uploading data, and refining the experience, all within the same portal.

Why it matters:

- Everything happens in one place, with no infrastructure to spin up
- Supports a wide range of AI tasks, not just conversational use-cases
- You can test grounding, flow, tone, latency, and more

Example:

You're building an insurance claims assistant. In the Playground, you can test whether a model can understand policy language, summarise complaints, and respond appropriately, all before writing any code.

There's a clear pattern forming here: pick a model, try it out, then...

Build and Customise

Once you’ve identified the right model, Foundry provides everything you need to shape it into a working solution. This stage is where ideas become applications.

Agents

If you want AI that does more than just respond to prompts (fetching data, making decisions, or calling APIs, for example), Agents give you that flexibility within a structured workflow.

Why it matters:

Great for building multi-step processes that need real-time decisions and data handling.

Example:

A user asks a chatbot, "Where's my order?" Behind the scenes, agents could:

- Call an API to get the status
- Determine if a refund is needed
- Respond with a personalised update, all automatically
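The three steps above can be sketched in plain Python. This is not the Foundry Agents API; `get_order_status`, `refund_needed`, and `handle_order_query` are hypothetical stand-ins, the order data is invented, and the seven-day refund threshold is an assumption chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class OrderStatus:
    order_id: str
    state: str      # e.g. "shipped", "delayed", "lost"
    days_late: int


def get_order_status(order_id: str) -> OrderStatus:
    """Step 1: stand-in for a real order API call (hypothetical data)."""
    fake_orders = {
        "A100": OrderStatus("A100", "shipped", 0),
        "B200": OrderStatus("B200", "delayed", 9),
    }
    return fake_orders[order_id]


def refund_needed(status: OrderStatus, late_threshold_days: int = 7) -> bool:
    """Step 2: refund if the order is lost or later than the threshold."""
    return status.state == "lost" or status.days_late > late_threshold_days


def handle_order_query(customer_name: str, order_id: str) -> str:
    """Step 3: build a personalised reply from the status and the decision."""
    status = get_order_status(order_id)
    if refund_needed(status):
        return (f"Hi {customer_name}, order {order_id} is {status.state}. "
                "We have started a refund for you.")
    return f"Hi {customer_name}, order {order_id} is {status.state} and on track."


print(handle_order_query("Sam", "B200"))
```

In a real agent, the model would decide which of these tools to call and in what order; the sketch hard-codes that sequence to keep the flow visible.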

Templates

Templates are code-first samples hosted on GitHub, with pre-written instructions and scripts ready to deploy locally, in containers, or GitHub Codespaces.

Why it matters:

They help you go from experiment to pro-code implementation fast, especially when you want to integrate a model into a real application.

Example:

You want to quickly test a multi-agent use-case that combines order tracking with sentiment analysis. Instead of starting from scratch, you deploy a GitHub-hosted template that wires everything together with minimal setup.

Fine-tuning

Fine-tuning allows you to retrain a model on your own data so it adapts to your domain. This is different from grounding, which connects a model to external data at runtime without changing how the model is trained.

Think of it like this:

- Grounding is real-time: you point the model to fresh data each time it's used
- Fine-tuning is permanent: you change how the model behaves based on your own examples
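A small sketch may help separate the two ideas. It uses no Foundry or model API: `DOCUMENTS`, `build_grounded_prompt`, and `to_training_line` are hypothetical names, and the chat-style `messages` layout for training data is an assumption based on a common convention, so check the format your chosen model actually expects.

```python
import json

# Hypothetical snippets that would normally come from a search index at runtime.
DOCUMENTS = {
    "returns": "Items can be returned within 30 days of delivery.",
    "shipping": "Standard shipping takes 3 to 5 working days.",
}


def build_grounded_prompt(question: str) -> str:
    """Grounding: look up current data on every call and put it in the prompt."""
    lowered = question.lower()
    hits = [text for topic, text in DOCUMENTS.items() if topic in lowered]
    context = "\n".join(hits) if hits else "(no matching documents)"
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


def to_training_line(question: str, ideal_answer: str) -> str:
    """Fine-tuning: each example becomes one JSON line in a training file."""
    record = {
        "messages": [
            {"role": "user", "content": question},
            {"role": "assistant", "content": ideal_answer},
        ]
    }
    return json.dumps(record)


print(build_grounded_prompt("How do returns work?"))
print(to_training_line("What is the returns window?", "30 days from delivery."))
```

Changing an entry in `DOCUMENTS` changes the next grounded answer immediately, whereas changing the training lines has no effect until the model is fine-tuned again.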

Why it matters:

Fine-tuning lets you customise a model to better reflect your domain, tone, and terminology – so it can generate responses that are more accurate, aligned with your brand, and tailored to your users.

Example:

You run a financial services chatbot. By fine-tuning the model on your internal policies and product names, it gives more accurate and compliant responses without needing long prompts every time.

Content Understanding

Content Understanding uses AI and services like Azure AI Search to extract meaningful, structured, machine-readable data from unstructured sources such as text, images, audio, or documents.

Why it matters:

Perfect for automating document processing, turning messy data into searchable content, and helping AI find the right information to generate accurate responses.

Example:

You upload scanned contracts, meeting transcripts, and product datasheets. Content Understanding processes these files, extracts key details like clauses, deadlines, and names, and makes them searchable, ready to power chatbots or automated review systems.

Prompt Flow

Prompt Flow is a visual tool for designing and testing AI workflows by combining models, logic, and data into repeatable steps.

Why it matters:

Prompt Flow gives you a faster, more reliable way to turn prompt experiments into real applications. Instead of managing scattered scripts and logic, you can design, test, and iterate on AI workflows in one place, with visibility, version control, and deployability built in.

Example:

You build a flow that takes customer feedback, summarises it using a model, runs sentiment analysis via a Python script, and stores the result in a database, all within a single visual workflow.

At this stage, you've picked a model, tested it, and now built it into something usable. But before it's truly production ready, there's more to consider...
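As a rough illustration of what such a flow chains together, here is the same sequence written as ordinary Python functions. This is not Prompt Flow itself: `summarise` is a stand-in for the model call, `sentiment` is a toy keyword check, and the `feedback` table in an in-memory SQLite database is an assumption made so the example runs on its own.

```python
import sqlite3

POSITIVE = {"great", "love", "fast", "helpful"}
NEGATIVE = {"slow", "broken", "rude", "late"}


def summarise(feedback: str) -> str:
    """Stand-in for a model call: keep only the first sentence."""
    return feedback.split(".")[0].strip() + "."


def sentiment(text: str) -> str:
    """Toy keyword-based sentiment step (the 'Python script' node)."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    score = len(words & POSITIVE) - len(words & NEGATIVE)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def run_flow(feedback: str, conn: sqlite3.Connection):
    """Run the steps in order and store the result, as one flow would."""
    summary = summarise(feedback)
    label = sentiment(feedback)
    conn.execute(
        "INSERT INTO feedback (summary, sentiment) VALUES (?, ?)",
        (summary, label),
    )
    conn.commit()
    return summary, label


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE feedback (summary TEXT, sentiment TEXT)")
result = run_flow("Delivery was slow and the box arrived broken. I want a refund.", conn)
print(result)
```

The visual designer gives you the same chain as connected nodes, which makes it easier to swap a step or inspect its inputs and outputs.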

Assess and Improve

Building an AI application is one thing; keeping it reliable, safe, and effective is another. Foundry supports this with tracing, evaluation, and Responsible AI tooling.

Tracing

Tracing gives you detailed visibility into how your AI application runs behind the scenes. It captures each step in a prompt flow (including model calls, API interactions, function executions, and data handling) and presents it as a visual timeline using Azure Application Insights.

Why it matters:

Tracing helps you understand exactly how your AI flow behaves, which is critical when diagnosing issues, identifying performance bottlenecks, or validating that your logic is working as expected. It saves hours of guesswork and gives you confidence that your app will behave reliably in production.

Example:

You've built a multi-step chatbot that fetches data from several APIs. A user reports slow responses. Using tracing, you discover a third-party API call is consistently delaying the flow, allowing you to pinpoint and fix the issue quickly.
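The underlying idea, timing each step so the slow one stands out, can be shown with a few lines of standard-library Python. This is only a sketch of the concept, not Foundry's tracing or Application Insights; the `span` helper, the step names, and the `time.sleep` calls that simulate work are all assumptions.

```python
import time
from contextlib import contextmanager

spans = []  # collected (step name, duration in seconds) pairs


@contextmanager
def span(name: str):
    """Record how long the wrapped block takes, even if it raises."""
    start = time.perf_counter()
    try:
        yield
    finally:
        spans.append((name, time.perf_counter() - start))


def handle_request():
    # Each sleep simulates the work of one step in the chatbot flow.
    with span("load_customer"):
        time.sleep(0.01)
    with span("third_party_api"):
        time.sleep(0.05)
    with span("model_call"):
        time.sleep(0.02)


handle_request()
slowest = max(spans, key=lambda s: s[1])
for name, seconds in spans:
    print(f"{name}: {seconds * 1000:.1f} ms")
print("slowest step:", slowest[0])
```

With the simulated delays above, the third-party call should come out as the slowest step, mirroring the scenario in the example.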

Evaluation

Foundry’s evaluation tooling lets you measure how well your application is performing using manual or automated evaluators. These are designed to test the quality, safety, and reliability of the outputs generated by your models, datasets, or prompt flows.

You can choose from Microsoft-curated evaluators like:

- Groundedness Evaluator: checks how well the response stays tied to source data
- Toxicity and Violence Evaluators: flag unsafe content
- Relevance, Similarity, and Retrieval Evaluators: assess response quality
- Hate-and-Unfairness Evaluator: monitors bias and discrimination
- Indirect Attack Evaluator: part of red-teaming scenarios

All evaluators can be run manually, automated in CI/CD pipelines, or used during development to fine-tune quality before release.

Why it matters:

Evaluation helps you build trustworthy AI. It gives you clear metrics for performance and safety, helps you choose the best model or prompt for your scenario, and ensures consistent quality as your application evolves.

Example:

You’re testing two prompt flows for summarising customer emails. By using the Relevance and Groundedness evaluators, you can see which one produces more accurate summaries, and automatically flag hallucinated content before your app ever goes live.
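To give a feel for what a groundedness check measures, here is a deliberately crude stand-in: the share of words in a summary that also appear in the source. It is not how Foundry's Groundedness evaluator works (this is simple word overlap); `groundedness_score` and the sample texts are hypothetical.

```python
import re


def _tokens(text: str) -> set:
    """Lower-case word and number tokens, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def groundedness_score(response: str, source: str) -> float:
    """Fraction of response tokens that also appear in the source (0..1)."""
    response_tokens = _tokens(response)
    if not response_tokens:
        return 0.0
    return len(response_tokens & _tokens(source)) / len(response_tokens)


SOURCE = ("The customer reports that order 4417 arrived damaged "
          "and asks for a replacement.")
summary_a = "Order 4417 arrived damaged; the customer asks for a replacement."
summary_b = "The customer wants a full refund and compensation for late delivery."

for label, summary in [("flow A", summary_a), ("flow B", summary_b)]:
    print(label, round(groundedness_score(summary, SOURCE), 2))
```

Summary A scores 1.0 because every word traces back to the email, while summary B scores well under 0.5 because it introduces a refund and a late delivery that the source never mentions.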

Safety and Security

Foundry includes built-in tools to help you deliver AI responsibly by identifying, measuring, and managing risk throughout your app's lifecycle.

The process is structured around three key stages:

- Map potential issues like misuse, bias, or unsafe content using red-teaming and testing
- Measure how frequently risks occur and how severe they are, using test datasets and evaluation metrics
- Manage risk in production with layered mitigation plans, including filters, grounding, system prompts, and operational monitoring

Why it matters:

When you’re shipping AI into the real world, trust is everything. Foundry helps you catch issues early, prove your system is safe, and stay ahead of regulatory or reputational risks, without having to build your own safety stack from scratch.

Example:

You’re about to launch a chatbot that handles customer queries. With Foundry, you can simulate harmful prompts, evaluate how the model responds, and configure output filters and safety layers to prevent misuse, all before your users ever see it.

There we go: you've picked a model, tested it, built it into something usable, and now you've embedded it into your application, knowing you can keep a close eye on it.
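The "measure" and "manage" steps can be illustrated with a toy example. It does not use Foundry's content filters or safety evaluators; the blocked-term list, the sample responses, and the helper names are all assumptions, and a real system would rely on far more than keyword matching.

```python
BLOCKED_TERMS = {"password", "card number"}  # hypothetical policy list


def is_unsafe(response: str) -> bool:
    """Toy check: flag a response that contains any blocked term."""
    lowered = response.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def defect_rate(responses) -> float:
    """Measure: share of test responses flagged as unsafe."""
    if not responses:
        return 0.0
    return sum(is_unsafe(r) for r in responses) / len(responses)


def apply_filter(response: str) -> str:
    """Manage: replace flagged output with a safe fallback message."""
    if is_unsafe(response):
        return "Sorry, I can't help with that."
    return response


# Made-up model outputs collected from a simulated red-teaming run.
TEST_RESPONSES = [
    "Your order ships tomorrow.",
    "Sure, the admin password is hunter2.",
    "Please read out your card number.",
    "Our returns window is 30 days.",
]

rate = defect_rate(TEST_RESPONSES)
print(f"defect rate: {rate:.0%}")
print([apply_filter(r) for r in TEST_RESPONSES])
```

Tracking a rate like this across releases is what turns a one-off safety review into something you can monitor over the app's lifecycle.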

Conclusion

Let's imagine for a moment that Azure AI Foundry didn't exist. You'd be evaluating models from multiple providers, stitching together tools, and figuring out how to maintain visibility, security, and compliance at every step. Even spinning up a simple AI-enabled app would be time-consuming and complex.

Azure AI Foundry changes that. It brings everything into one unified platform so you can move faster, stay consistent, and go from prototype to production with the guardrails already built in.

That's the real value of Foundry: the heavy lifting is done for you. Everything's under one roof and deeply integrated with the Azure ecosystem. You get consistency, speed, and security, so you can focus on building, not bolting things together.

Disclaimer: The views expressed in this blog are my own and do not necessarily reflect those of my employer or Microsoft.
