
May 30, 2025

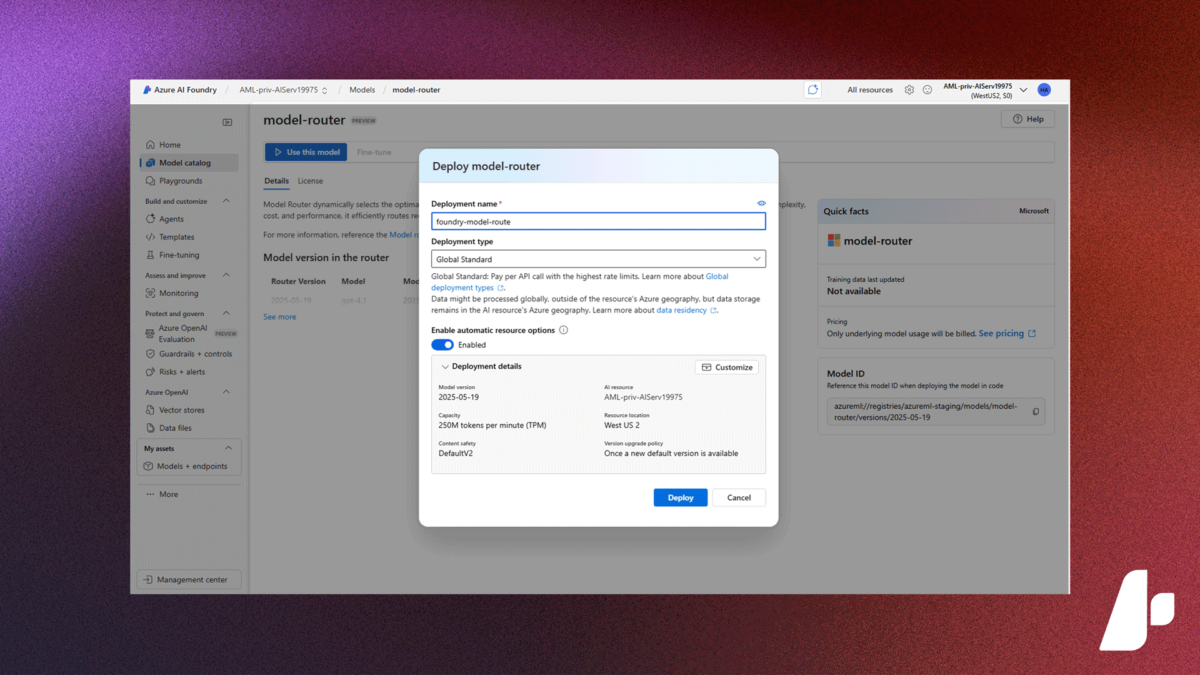

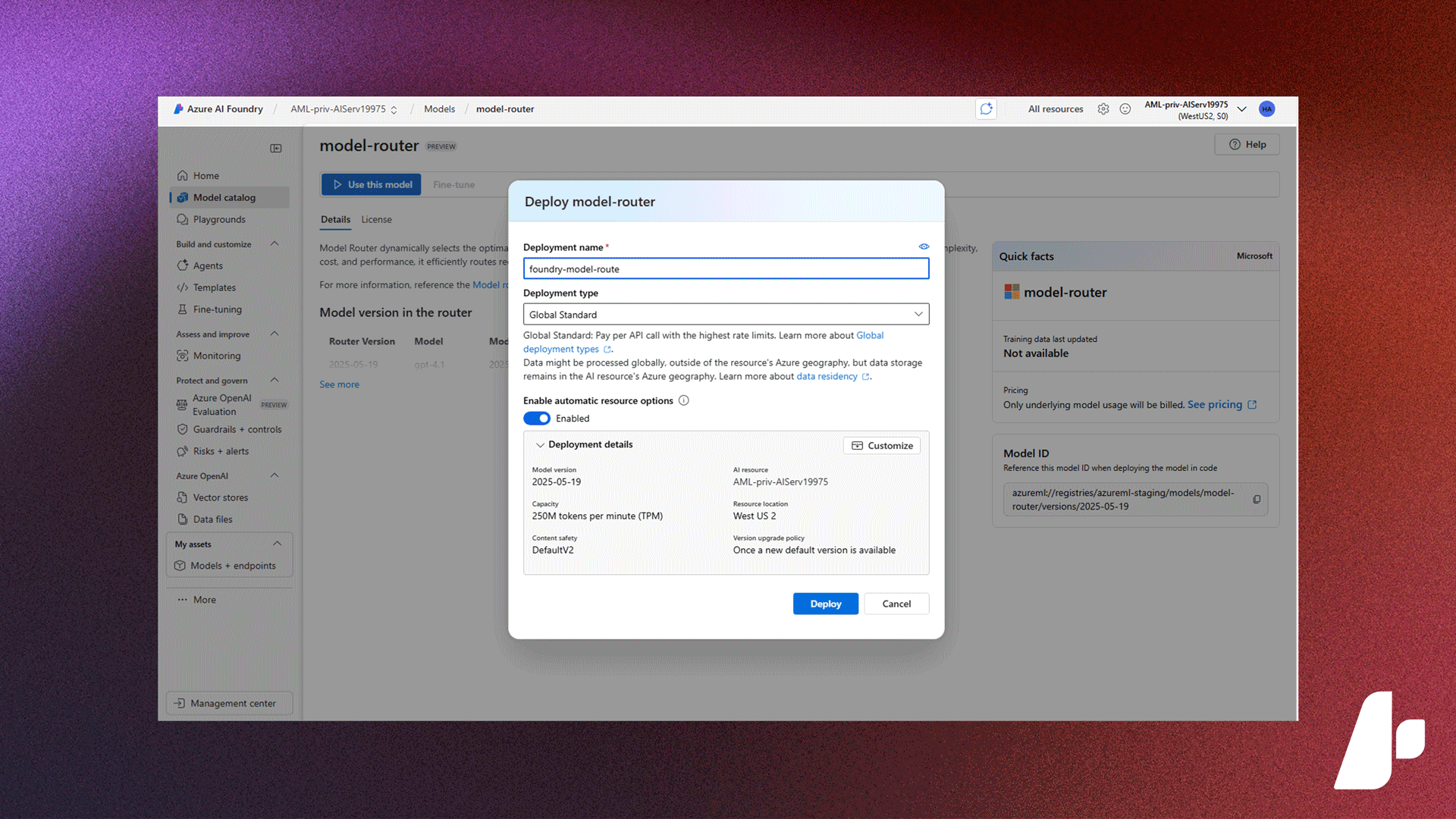

What it is:

This one is really cool! The Model Router is a new feature that automatically selects the most suitable Azure OpenAI model for each prompt, optimising for performance and cost.

It weighs factors like query complexity, cost, and performance, then routes each request accordingly.

Why it matters:

- Reduces the need for manual model selection
- Enhances response quality by choosing the best-fit model
- Optimises costs by selecting the most efficient model

Example use cases:

- Dynamic selection between GPT-4 and GPT-3.5 based on prompt complexity
- Automatically routing image-related prompts to vision models
- Selecting lightweight models for simple tasks to save costs
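To make the routing idea a bit more concrete, here is a minimal sketch of complexity-based routing. This is not how the Model Router is implemented (it makes the decision for you, server-side); the tier names, keyword hints, and word-count thresholds below are all made up for illustration.

```python
# Hypothetical illustration only: the real Model Router decides this for you.
# The hints, thresholds, and tier names are assumptions, not Azure values.
REASONING_HINTS = ("prove", "step by step", "analyse", "compare", "debug")


def pick_model(prompt: str) -> str:
    """Return a made-up model tier name for the given prompt."""
    text = prompt.lower()
    word_count = len(text.split())

    # Prompts that ask for reasoning, or are very long, go to the largest tier.
    if any(hint in text for hint in REASONING_HINTS) or word_count > 200:
        return "large-model"

    # Mid-length prompts go to a middle tier.
    if word_count > 40:
        return "medium-model"

    # Everything else is cheap enough for the smallest tier.
    return "small-model"
```

A short factual question would land on the small tier here, while anything asking for step-by-step reasoning would go to the large one. The appeal of the real feature is that you don't have to write or maintain rules like these yourself.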

More Models & More Capacity

What it is:

Soooo many models! In Azure AI Foundry, there are models sold directly by Microsoft, and models from third parties. The update here is that you can now use models from AI-focussed tech companies such as xAI, Black Forest Labs, and Hugging Face.

On top of that, Microsoft is extending reserved capacity to cover Azure OpenAI and select Foundry Models (including Black Forest Labs and xAI). This means you get consistent performance even when demand spikes.

Why it matters:

- Huge increase in model choice – over 11,000!
- Consistent performance under load with reserved capacity options
- Fine-tune and experiment without infrastructure overhead

Example use cases:

- Experiment with emerging models in development, then scale to production seamlessly
- Ensure consistent response times for AI services during high-traffic periods

Foundry Local

What it is:

Foundry Local brings Azure AI Foundry capabilities directly to your own infrastructure, whether that's a developer workstation, edge device, or air-gapped data centre. This includes support for offline execution! Imagine interacting with an AI chatbot as a Windows app on your laptop – even when completely offline!

Why it matters:

- Enables AI use in scenarios with strict data privacy or sovereignty requirements
- Delivers sub-second response times without a cloud round-trip
- Supports hybrid and offline environments with no connectivity needed

Example use cases:

- Run AI models at the edge in manufacturing, retail, or healthcare settings
- Enable offline document processing or vision capabilities on laptops
- Deploy secure, private agents in government or defence environments

Fine-Tuning & Developer Tier

What it is:

Fine-tuning has traditionally been tricky, not just technically, but also in terms of cost and where you could actually run it. That's changing.

Fine-tuning allows you to retrain a model on your own data so it adapts to your domain. This is different from grounding, which connects a model to external data at runtime without changing the model itself.
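The difference is easier to see side by side. The sketch below builds one fine-tuning training record in the chat-style JSONL shape used for chat models, and then a grounded prompt where retrieved text is injected at request time. The function names, the system prompts, and the claims-handling scenario are my own assumptions for illustration.

```python
import json


def fine_tuning_example(question: str, ideal_answer: str) -> str:
    """Build one JSONL line of training data: the model itself learns from this."""
    record = {
        "messages": [
            # Hypothetical system prompt for an imaginary claims assistant.
            {"role": "system", "content": "You are a claims-handling assistant."},
            {"role": "user", "content": question},
            {"role": "assistant", "content": ideal_answer},
        ]
    }
    return json.dumps(record)


def grounded_messages(question: str, retrieved_docs: list[str]) -> list[dict]:
    """Build a runtime prompt: the model is unchanged, context is added per request."""
    context = "\n\n".join(retrieved_docs)
    return [
        {
            "role": "system",
            "content": "Answer using only the context below.\n\n" + context,
        },
        {"role": "user", "content": question},
    ]
```

With fine-tuning you collect many lines like the first and train on them once; with grounding you assemble something like the second on every request, from whatever your retrieval step returns.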

With the public previews of Global Training and the new Developer Tier, Azure AI Foundry is making fine-tuning more accessible than ever. You can now fine-tune the latest Azure OpenAI models in new worldwide regions, with lower pricing designed specifically for experimentation and iteration.

Global Training handles the infrastructure behind the scenes and the Developer Tier removes the upfront hosting cost, so you only pay when you actually train or use a model.

Why it matters:

- Run fine-tuning closer to your data with expanded regional support
- Experiment more freely with reduced pricing
- Skip the infra setup: Foundry handles it for you

Example use cases:

- Trial multiple fine-tuning approaches in a low-cost environment before committing to production
- Fine-tune a model in-region to meet data residency requirements for financial or healthcare data
- Quickly test how adding domain-specific examples affects summarisation performance – without setting up infrastructure
- Build and evaluate early-stage agent prototypes on the Developer Tier, then scale seamlessly to production using the same workflows

Multi-agent Orchestration

What it is:

Azure AI Foundry now includes native tools for designing and coordinating multiple AI agents within a single system. This goes beyond prompt chaining – agents can now have distinct roles, shared memory, and coordinated workflows, all managed within Foundry. I need to write about this, and Agents in general, in a lot more detail!

As part of this, Microsoft also introduced an agent catalogue: a growing library of pre-built, configurable agents for common tasks like retrieval, planning, evaluation, and summarisation. You can use them as-is or customise them to fit your specific needs.

Why it matters:

- Enables more advanced, multi-step AI use cases
- Removes the need for custom orchestration logic
- Encourages modular, maintainable agent design
- Supports real collaboration between specialised agents

Example use cases:

- A planning agent delegates tasks to retrieval, generation, and validation agents
- A multi-agent customer service flow handles triage, resolution, and escalation
- A compliance assistant splits work across extraction, analysis, and reporting agents
- A document workflow uses separate agents to summarise, translate, and format content
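As a rough mental model of the first use case, here is a toy planner that hands work through retrieval, generation, and validation agents in turn. It uses plain Python functions as stand-ins for agents; none of these names come from the Foundry SDK, and a real orchestration would involve model calls, shared memory, and error handling.

```python
from typing import Callable

# An "agent" here is just a function from text to text (an assumption for the sketch).
Agent = Callable[[str], str]


def retrieval_agent(task: str) -> str:
    """Pretend to look up facts relevant to the task."""
    return f"[facts for: {task}]"


def generation_agent(facts: str) -> str:
    """Pretend to write a draft from the retrieved facts."""
    return f"Draft based on {facts}"


def validation_agent(draft: str) -> str:
    """Pass the draft through if it looks like a draft, otherwise reject it."""
    return draft if draft.startswith("Draft") else "REJECTED"


def planning_agent(task: str, pipeline: list[Agent]) -> list[str]:
    """Delegate the task through each agent in order, recording every hand-off."""
    trace = []
    payload = task
    for agent in pipeline:
        payload = agent(payload)
        trace.append(f"{agent.__name__}: {payload}")
    return trace
```

Calling `planning_agent("refund policy", [retrieval_agent, generation_agent, validation_agent])` returns a three-entry trace, one per hand-off. Hand-rolled glue like `planning_agent` is the kind of custom orchestration logic the native tooling is meant to replace.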

Identity for Agents

What it is:

Microsoft Entra Agent ID is a new capability that brings enterprise-grade identity and access management to AI agents. Just like you'd give an app or service a managed identity, you can now give AI agents their own secure, verifiable identity within your organisation.

This allows agents to authenticate, authorise, and operate securely across your systems, with support for auditing, policy enforcement, and lifecycle management – all integrated with Microsoft Entra.

Why it matters:

- Secures agent interactions with APIs, data, and enterprise resources
- Enables RBAC and policy enforcement for AI agents
- Improves traceability and auditing of agent actions
- Aligns agent behaviour with existing identity and governance frameworks

Example use cases:

- An AI agent authenticates with Entra to access SharePoint or Microsoft Graph
- Different agents have scoped permissions based on function – e.g. read-only vs full write access
- Agent activity is logged and monitored alongside human and app identities
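The scoped-permissions and auditing ideas can be sketched in a few lines. To be clear, this is a conceptual toy and not the Entra API: the `AgentIdentity` class, the role names, and the audit log shape are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentIdentity:
    """Hypothetical stand-in for an agent's identity and its assigned roles."""
    name: str
    roles: frozenset = field(default_factory=frozenset)


# Made-up role definitions: which actions each role permits.
ROLE_PERMISSIONS = {
    "reader": {"read"},
    "contributor": {"read", "write"},
}


def is_allowed(agent: AgentIdentity, action: str) -> bool:
    """True if any of the agent's roles grants the requested action."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in agent.roles)


def perform(agent: AgentIdentity, action: str, resource: str, audit_log: list) -> None:
    """Record the attempt in the audit log, then refuse it if not permitted."""
    allowed = is_allowed(agent, action)
    audit_log.append(
        {"agent": agent.name, "action": action, "resource": resource, "allowed": allowed}
    )
    if not allowed:
        raise PermissionError(f"{agent.name} may not {action} {resource}")
```

Note that the attempt is logged before the permission check raises, so denied actions show up in the audit trail as well as successful ones.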

Azure AI Foundry Observability

What it is:

Azure AI Foundry Observability is a unified solution for governance, evaluation, tracing, and monitoring. It brings real-time visibility into models, agents, workflows, and user interactions – all from a single view.

In my last post, I briefly described evaluation and monitoring in Foundry. I've not had a chance to look at this in greater detail yet, but as I understand the announcement, Foundry Observability combines what already existed within Foundry itself (live request tracing, model metrics, agent behaviours, evaluation results, and so on) and now integrates it fully with Azure Monitor, Application Insights, and the Foundry portal.

Why it matters:

- One integrated view – no more tool sprawl
- Real-time tracing and evaluation across agents and models
- Built-in governance features to support audits and responsible AI
- Makes it easier to go from prototype to production with confidence

Example use cases:

- Trace a user request across multiple agents in a multi-turn workflow
- Set alerts when accuracy or safety thresholds are breached
- Track agent behaviour over time to spot drift or unexpected changes
- Export evaluation logs for compliance or internal reviews
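The threshold-alert use case boils down to comparing evaluation scores against a minimum. Here is a small sketch of that logic, assuming scores arrive as lists of numbers per metric; the function name, metric names, and thresholds are invented for the example, and in practice you would configure alerts in the platform rather than write this yourself.

```python
from statistics import mean


def breached_thresholds(
    scores: dict[str, list[float]], minimums: dict[str, float]
) -> list[str]:
    """Return one alert message per metric whose mean score is below its minimum."""
    alerts = []
    for metric, minimum in minimums.items():
        values = scores.get(metric, [])
        # Metrics with no recorded scores are skipped rather than flagged.
        if values and mean(values) < minimum:
            alerts.append(f"{metric}: mean {mean(values):.2f} below {minimum:.2f}")
    return alerts
```

Averaging over a window of recent evaluations, rather than alerting on a single bad response, is one simple way to avoid noisy alerts.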

And The Rest!

There's a lot I haven't covered here – from updates to Agentic Retrieval in Azure AI Search, to Agent Evaluators, and improvements to the Foundry API and SDK.

I've also noticed growing overlap with tools like Copilot Studio and Azure Logic Apps, which adds more capability – but also more complexity. It's a lot to take in, but I hope this post has helped you cut through the noise and focus on what's new and important in Azure AI Foundry.

The link below (click the image) will take you to another excellent write-up, and the Build Book of News is another fantastic resource for exploring the full range of announcements.

If you'd prefer to see it all in action, I highly recommend the Build session: Azure AI Foundry: The AI App and Agent Factory – complete with demos of many of the updates mentioned here.

Disclaimer: The views expressed in this blog are my own and do not necessarily reflect those of my employer or Microsoft.
