April 15, 2025

In my recent blog series "Private Link reality bites" I briefly mentioned the possibility of inspecting Service Endpoints with Azure Firewall, and many have asked for more details on that configuration. Here we go!

First things first: what the heck am I talking about? Most Azure services, such as Azure Storage, Azure SQL and many others, can be accessed directly over the public Internet. However, there are two alternatives to access those services over Microsoft's backbone: Private Link and VNet Service Endpoints. Microsoft's general recommendation is to use Private Link, but some organizations prefer service endpoints. Feel free to read this post for a comparison of the two.

You might want to inspect traffic to Azure services with network firewalls, even if that traffic uses service endpoints. Before doing so, please consider that sending high-bandwidth traffic through a firewall might increase costs and add latency to your application. If you still want to go ahead, this post explains how to do it.

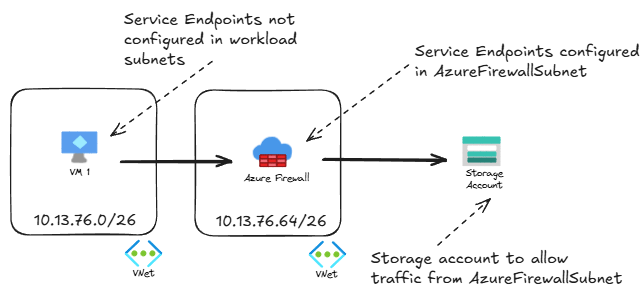

The design

Service endpoints have two configuration parts:

- Source subnet configuration to tunnel traffic to the destination service.

- Destination service configuration to accept traffic from the source subnet.

The key concept to understand is that if traffic from the client is going to be inspected by a firewall before going to the Azure service, then the source subnet is actually the Azure Firewall’s subnet, not the original client’s subnet:

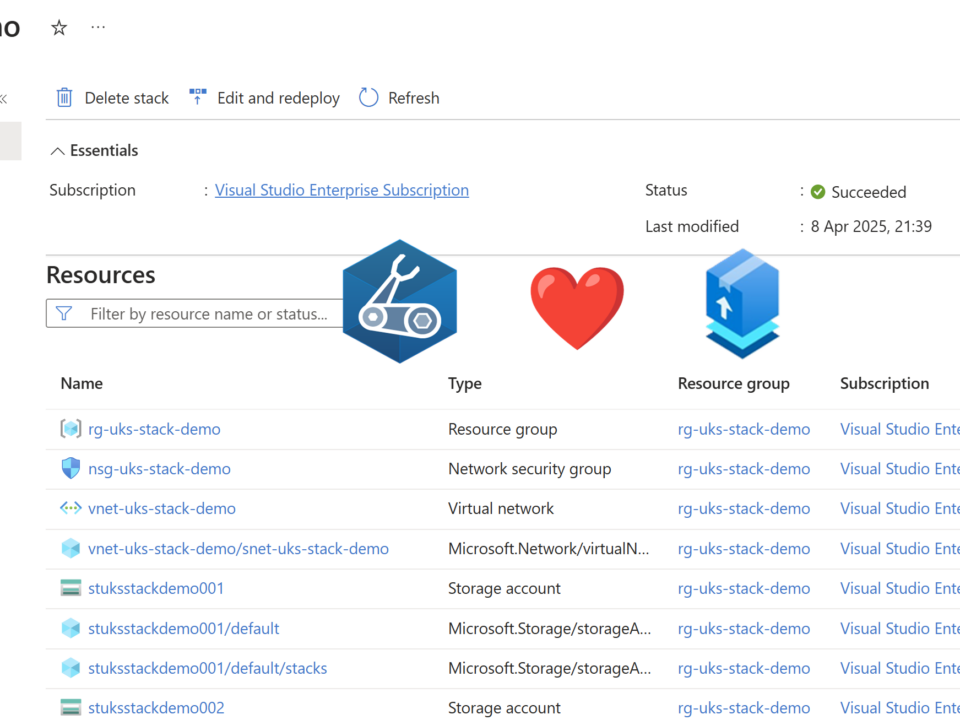

You can configure service endpoints for a specific Azure service on a subnet using the portal, Terraform, Bicep, PowerShell or the Azure CLI. In the portal this is what it looks like:

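With the Azure CLI, enabling service endpoints for Azure Storage accounts in all regions (`Microsoft.Storage.Global`) on the Azure Firewall subnet would look like this:

```bash
# Assumes $vnet_name and $rg already contain your VNet name and resource group
subnet_name=AzureFirewallSubnet
az network vnet subnet update -n "$subnet_name" --vnet-name "$vnet_name" -g "$rg" \
    --service-endpoints Microsoft.Storage.Global \
    -o none --only-show-errors
```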

You would then configure your Azure services to accept traffic coming from the Azure Firewall subnet. For example, for Azure Storage Accounts this is what you would see in the portal:
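If you prefer the CLI over the portal, the storage side could look roughly like the sketch below. It uses the `az storage account network-rule add` and `az storage account update --default-action Deny` commands; the resource group, VNet and storage account names are hypothetical placeholders, and the small `run` helper (also hypothetical, just for this example) only prints each command unless you set `DRY_RUN=0`. Keep in mind that `--default-action Deny` blocks any access not covered by a network rule.

```bash
#!/usr/bin/env bash
# Hypothetical placeholders: override them with your own values.
rg=${rg:-my-rg}
vnet_name=${vnet_name:-my-vnet}
storage_account_name=${storage_account_name:-mystorageaccount}
subnet_name=AzureFirewallSubnet

# Print each command, and only execute it when DRY_RUN=0.
run() {
  echo "+ $*"
  if [[ ${DRY_RUN:-1} == 0 ]]; then "$@"; fi
}

# 1. Accept traffic from the Azure Firewall subnet
#    (the subnet needs a storage service endpoint for this rule to apply).
run az storage account network-rule add \
  --resource-group "$rg" --account-name "$storage_account_name" \
  --vnet-name "$vnet_name" --subnet "$subnet_name"

# 2. Deny everything that does not match a network rule.
run az storage account update \
  --resource-group "$rg" --name "$storage_account_name" \
  --default-action Deny
```

Printing first and executing only on request makes it easy to review the exact commands before touching a real storage account.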

Network rules or Application rules?

Ideally you should use Application Rules in your firewall to make sure that your workloads are accessing the right Azure services, and are not exfiltrating data to rogue services that might be owned by somebody else and could still share the same IP address.

This is an example of a star (wildcard) rule granting access to all Azure Storage accounts, but you should specify your own accounts instead:
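If you manage Azure Firewall with a firewall policy, a comparable rule could be created from the CLI along the lines of the sketch below. It assumes the `azure-firewall` CLI extension is installed (`az extension add --name azure-firewall`), that the policy and rule collection group (hypothetical names below) already exist, and it limits the source to the workload subnet used in this post's test (10.13.76.0/26). Flag names can vary between CLI versions, so check `--help` before running it; the hypothetical `run` helper only prints the command unless `DRY_RUN=0`.

```bash
#!/usr/bin/env bash
# Hypothetical placeholders: override them with your own values.
rg=${rg:-my-rg}
policy_name=${policy_name:-my-fw-policy}
rcg_name=${rcg_name:-my-rule-collection-group}
workload_prefix=${workload_prefix:-10.13.76.0/26}   # workload subnet in this post

# Print each command, and only execute it when DRY_RUN=0.
run() {
  echo "+ $*"
  if [[ ${DRY_RUN:-1} == 0 ]]; then "$@"; fi
}

# Application rule: HTTPS from the workload subnet to any blob endpoint.
# Replace the wildcard with your own storage account FQDNs in production.
run az network firewall policy rule-collection-group collection add-filter-collection \
  --resource-group "$rg" --policy-name "$policy_name" \
  --rule-collection-group-name "$rcg_name" \
  --name AllowStorage --collection-priority 200 --action Allow \
  --rule-name AllowBlob --rule-type ApplicationRule \
  --source-addresses "$workload_prefix" \
  --protocols Https=443 \
  --target-fqdns '*.blob.core.windows.net'
```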

I tested with two storage accounts, one in the same region as the client and another one in a different region (the client in this case being the Azure Firewall). Access to both storage accounts works, and as you can see both requests are logged in the storage account as well as in the Azure Firewall (I have replaced some characters of the storage account names with question marks to obfuscate them):

```
❯ ssh $vm_pip "curl -s4 $storage_blob_fqdn1"
Hello world!
❯ ssh $vm_pip "curl -s4 $storage_blob_fqdn2"
Hello world!
❯ query='StorageBlobLogs | where TimeGenerated > ago(15m) | project TimeGenerated, AccountName, StatusCode, CallerIpAddress'
❯ az monitor log-analytics query -w $logws_customerid --analytics-query $query -o table
AccountName             CallerIpAddress    StatusCode    TableName      TimeGenerated
----------------------  -----------------  ------------  -------------  ----------------------------
storagetest????eastus2  10.13.76.72:10066  200           PrimaryResult  2025-04-14T09:12:59.2174817Z
storagetest????westus2  10.13.76.72:11880  200           PrimaryResult  2025-04-14T09:13:03.3861533Z
❯ query='AzureDiagnostics | where TimeGenerated > ago(15m) | where Category == "AZFWApplicationRule" | project TimeGenerated, SourceIP, Fqdn_s, Protocol_s, Action_s'
❯ az monitor log-analytics query -w $logws_customerid --analytics-query $query -o table
Action_s    Fqdn_s                                        Protocol_s    SourceIP    TableName      TimeGenerated
----------  --------------------------------------------  ------------  ----------  -------------  ---------------------------
Allow       storagetest????eastus2.blob.core.windows.net  HTTPS         10.13.76.4  PrimaryResult  2025-04-14T09:12:59.193852Z
Allow       storagetest????westus2.blob.core.windows.net  HTTPS         10.13.76.4  PrimaryResult  2025-04-14T09:13:03.138999Z
```

The storage account sees the Azure Firewall's IP as the client IP (10.13.76.72, in the firewall subnet 10.13.76.64/26), while the Azure Firewall logs show the actual client IP (10.13.76.4, in the workload subnet 10.13.76.0/26).
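To double-check which subnet each logged address belongs to, here is a small bash sketch (not part of the original setup) that tests IPv4 CIDR membership. The addresses and prefixes are taken from the output above; note that `CallerIpAddress` in the storage logs includes a source port, which the sketch strips before comparing.

```bash
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
# Assumes canonical addresses without leading zeros (bash would read "076" as octal).
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed (exit status 0) if IPv4 address $1 is inside CIDR prefix $2.
in_subnet() {
  local ip=$1 net=${2%/*} len=${2#*/}
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

seen_by_storage=10.13.76.72:10066   # CallerIpAddress in StorageBlobLogs
seen_by_firewall=10.13.76.4         # SourceIP in the AZFWApplicationRule logs

for pair in "${seen_by_storage%%:*} 10.13.76.64/26" "$seen_by_firewall 10.13.76.0/26"; do
  read -r ip prefix <<< "$pair"
  if in_subnet "$ip" "$prefix"; then
    echo "$ip is in $prefix"
  else
    echo "$ip is NOT in $prefix"
  fi
done
```

Running it prints that 10.13.76.72 is in the firewall subnet and 10.13.76.4 is in the workload subnet, which is exactly why the storage account only needs to allow the Azure Firewall subnet.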

If you use network rules you would lose a lot of the flexibility of Azure Firewall, even with FQDN-based rules. The reason is that network rules match on IP addresses: if two storage accounts with different FQDNs shared the same IP address, and the same client resolved both FQDNs, Azure Firewall would not be able to tell which storage account each packet belongs to. From a routing perspective it would still work though, as long as you keep the default SNAT settings of Azure Firewall, which translate the source IP address when the destination is a public IP address, so that the storage account sees the traffic coming from the firewall subnet:
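The IP-versus-name argument can be illustrated with a toy model in bash. This is not how Azure Firewall is implemented internally: the two FQDNs and the shared IP 203.0.113.10 are hypothetical, a network rule is modeled as comparing resolved destination IPs, and an application rule as comparing the requested FQDN (which, for HTTPS without TLS inspection, Azure Firewall reads from the TLS SNI). Associative arrays require bash 4 or later.

```bash
#!/usr/bin/env bash
# Hypothetical DNS answers: two storage accounts sharing one front-end IP.
declare -A dns=(
  [goodaccount.blob.core.windows.net]=203.0.113.10
  [rogueaccount.blob.core.windows.net]=203.0.113.10
)

# Network rule (toy model): the allowed FQDN is resolved to an IP and
# each packet is matched on its destination IP only.
network_rule_allows() {
  local allowed_fqdn=$1 dest_fqdn=$2
  [[ ${dns[$dest_fqdn]} == "${dns[$allowed_fqdn]}" ]]
}

# Application rule (toy model): the requested name itself is matched.
app_rule_allows() {
  local allowed_fqdn=$1 requested_fqdn=$2
  [[ $requested_fqdn == "$allowed_fqdn" ]]
}

allowed=goodaccount.blob.core.windows.net
for target in goodaccount.blob.core.windows.net rogueaccount.blob.core.windows.net; do
  net=deny; app=deny
  network_rule_allows "$allowed" "$target" && net=allow
  app_rule_allows "$allowed" "$target" && app=allow
  echo "$target network=$net application=$app"
done
```

In this model the rogue account passes the network rule because it shares the allowed account's IP, while the application rule blocks it.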

Conclusion

There are some reasons why you might want to pick VNet service endpoints over Private Link (cost would probably be one of them). If you do, sending traffic to Azure services through a firewall has both advantages and disadvantages. If you decide to inspect traffic to VNet service endpoints with Azure Firewall, hopefully this post has shown you how to do it.

What are your thoughts about this?